William J. Clancey 1985

2. THE HEURISTIC CLASSIFICATION METHOD DEFINED

3. EXAMPLES OF HEURISTIC CLASSIFICATION

4. UNDERSTANDING HEURISTIC CLASSIFICATION

4.2. Alternative encodings of schemas

4.3. Relating heuristics to conceptual graphs

5. ANALYSIS OF PROBLEM TYPES IN TERMS OF SYSTEMS

6. INFERENCE STRATEGIES FOR HEURISTIC CLASSIFICATION

7. CONSTRUCTIVE PROBLEM SOLVING, AN INTRODUCTION

8. RELATING TOOLS, METHODS, AND TASKS

10. RELATED ANALYSES IN PSYCHOLOGY AND ARTIFICIAL INTELLIGENCE

11. SUMMARY OF KEY OBSERVATIONS

To understand something as a specific instance of a more general case--which is what understanding a more fundamental principle or structure means--is to have learned not only a specific thing but also a model for understanding other things like it that one may encounter. (Bruner, 1960)

The views and conclusions contained in this document are those of the authors and should not be interpreted as necessarily representing the official policies.

Approved for public release; distribution unlimited. Reproduction in whole or in part is permitted for any purpose of the United States Government.

A broad range of well-structured problems (embracing forms of diagnosis, catalog selection, and skeletal planning) are solved in “expert systems” by the method of heuristic classification. These programs have a characteristic inference structure that systematically relates data to a pre-enumerated set of solutions by abstraction, heuristic association, and refinement. In contrast with previous descriptions of classification reasoning, particularly in psychology, this analysis emphasizes the role of a heuristic in routine problem solving as a non-hierarchical, direct association between concepts. In contrast with other descriptions of expert systems, this analysis specifies the knowledge needed to solve a problem, independent of its representation in a particular computer language. The heuristic classification problem-solving model provides a useful framework for characterizing kinds of problems, for designing representation tools, and for understanding non-classification (constructive) problem-solving methods.

Over the past decade, a variety of heuristic programs, commonly called “expert systems,” have been written to solve problems in diverse areas of science, engineering, business, and medicine. Developing these programs involves satisfying an interacting set of requirements: selecting the application area and specific problem to be solved, bounding the problem so that it is computationally and financially tractable, and implementing a prototype program, to name a few obvious concerns. With continued experience, a number of programming environments or “tools” have been developed and successfully used to implement prototype programs (Hayes-Roth, et al., 1983). Importantly, the representational units of tools (such as “rules” and “attributes”) provide an orientation for identifying manageable subproblems and organizing problem analysis. Selecting appropriate applications now often takes the form of relating candidate problems to known computational methods, our tools.

Yet, in spite of this experience, when presented with a given “knowledge engineering tool,” such as EMYCIN (van Melle, 1979), we are still hard-pressed to say what kinds of problems it can be used to solve well. Various studies have demonstrated advantages of using one representation language instead of another for ease in specifying knowledge relationships, control of reasoning, and perspicuity for maintenance and explanation (Swartout, 1981, Aiello, 1983, Aikins, 1983, Clancey, 1983a, Clancey and Letsinger, 1984). Other studies have characterized in low-level terms why a given problem might be inappropriate for a given language, for example, because data are time-varying or subproblems interact (Hayes-Roth, et al., 1983). While these studies reveal the weaknesses and limitations of the rule-based formalism, in particular, they do not clarify the form of analysis and problem decomposition that has been so successfully used in these programs. In short, attempts to describe a mapping between kinds of problems and programming languages have not been satisfactory because they don’t describe what a given program knows: applications-oriented descriptions like “diagnosis” are too general (e.g., solving a diagnostic problem doesn’t necessarily require a device model), and technological terms like “rule-based” don’t describe what kind of problem is being solved (Hayes, 1977, Hayes, 1979). We need a better description of what heuristic programs do and know: a computational characterization of their competence, independent of task and independent of programming language implementation. Logic has been suggested as a basis for a “knowledge-level” analysis to specify what a heuristic program does and might know (Nilsson, 1981, Newell, 1982). However, we have lacked a set of terms and relations for doing this.

In an attempt to characterize the knowledge-level competence of a variety of expert systems, a number of programs were analyzed in detail. There is a striking pattern: These programs proceed through easily identifiable phases of data abstraction, heuristic mapping onto a hierarchy of pre-enumerated solutions, and refinement within this hierarchy. In short, these programs do what is commonly called classification, but with the important twist of relating concepts in different classification hierarchies by non-hierarchical, uncertain inferences. We call this combination of reasoning heuristic classification.

Note carefully: The heuristic classification model characterizes a form of knowledge and reasoning (patterns of familiar problem situations and solutions, heuristically related). In capturing problem situations that tend to occur and solutions that tend to work, this knowledge is essentially experiential, with an overall form that is problem-area independent. Heuristic classification is a method of computation, not a kind of problem to be solved. Thus, we refer to “the heuristic classification method,” not “classification problem.”

Focusing on epistemological content rather than representational notation, this paper proposes a set of terms and relations for describing the knowledge used to solve a problem by the heuristic classification method. Subsequent sections describe and illustrate the model in the analysis of MYCIN, SACON, GRUNDY, and SOPHIE III. Significantly, a knowledge-level description of these programs corresponds very well to psychological models of expert problem solving. This suggests that the heuristic classification problem-solving model captures general principles of how experiential knowledge is organized and used, and thus generalizes some cognitive science results. A thorough discussion relates the model to schema research, and the use of a conceptual graph notation shows how the inference-structure diagram characteristic of heuristic classification can be derived from some simple assumptions about how data and solutions are typically related (Section 4). Another detailed discussion then considers “what gets selected,” that is, the possible kinds of solutions (e.g., diagnoses). A taxonomy of problem types is proposed that characterizes solutions of problems in terms of synthesis or analysis of some system in the world (Section 5). We finally turn to the issue of inference control in order to further characterize tool requirements for heuristic classification (Section 6), segueing into a brief description of constructive problem solving (Section 7).

This paper explores different perspectives for describing expert systems; it is not a conventional description of a particular program or programming language. The analysis does produce some specific and obviously useful results, such as a distinction between electronic and medical diagnosis programs (Section 6.2). But there are also a few essays with less immediate payoffs, such as the analysis of problem types in terms of systems (Section 5) and the discussion of the pragmatics of defining concepts (Section 4.5). Also, readers who specialize in problems of knowledge representation should keep in mind that the discussion of schemas (Section 4) is an attempt to clarify the knowledge represented in rule-based expert systems, rather than to introduce new representational ideas.

From another perspective, this paper presents a methodology for analyzing problems, preparatory to building an expert system. It introduces an intermediate level of knowledge specification, more abstract than specific concepts and relations, but still independent of implementation language. Indeed, one aim is to afford a level of awareness for describing expert system design that enables knowledge representation languages to be chosen and used more deliberately.

We begin with the motivation of wanting to formalize what we have learned about building expert systems. How can we classify problems? How can we select problems that are appropriate for our tools? How can we improve our tools? Our study reveals patterns in knowledge bases: Inference chains are not arbitrary sequences of implications; they compose relations among concepts in a systematic way. Intuitively, we believe that understanding these high-level knowledge structures, implicitly encoded in today’s expert systems, will enable us to teach people how to use representation languages more effectively, and also enable us to design better languages. Moreover, it is a well-established principle for designing these programs that the knowledge people are trying to express should be stated explicitly, so it will be accessible to auxiliary programs for explanation, teaching, and knowledge acquisition (e.g., Davis, 1976).

Briefly, our methodology for specifying the knowledge contained in an expert system is based on:

a computational distinction between selection and construction of solutions;

a relational breakdown of concepts, distinguishing between abstraction and heuristic association and between subtype and cause, thus revealing the classification nature of inference chains; and

a categorization of problems in terms of synthesis and analysis of systems in the world, allowing us to characterize inference in terms of a sequence of classifications involving some system.

The main result of the study is the model of heuristic classification, which turns out to be a common problem-solving method in expert systems. Identifying this computational method is not to be confused with advocating its use. Instead, by giving it a name and characterizing it, we open the way to describing when it is applicable, contrasting it with alternative methods, and deliberately using it again when appropriate.

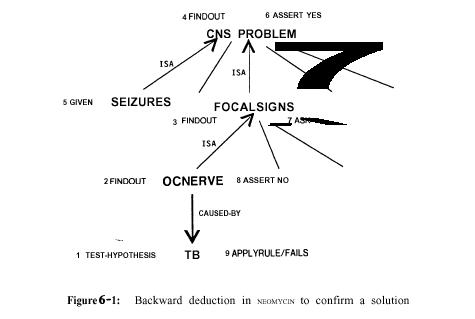

As one demonstration of the value of the model, classification in well-known medical and electronic diagnosis programs is described in some detail, contrasting different perspectives on what constitutes a diagnostic solution and different methods for controlling inference to derive coherent solutions. Indeed, an early motivation for this study was to understand how NEOMYCIN, a medical diagnostic program, could be generalized. The resulting tool, called HERACLES (roughly standing for “Heuristic Classification Shell”), is described briefly, with a critique of its capabilities in terms of the larger model that has emerged.

In the final sections of the paper, we reflect on the adequacy of current knowledge engineering tools, the nature of a knowledge-level analysis, and related research in psychology and artificial intelligence. There are several strong implications for the practice of building expert systems, designing new tools, and continued research in this field. Yet to be delivered, but promised by the model, are explanation and teaching programs tailored to the heuristic classification model, better knowledge acquisition programs, and a demonstration that thinking in terms of heuristic classification makes it easier to choose problems and build new expert systems.

2. THE HEURISTIC CLASSIFICATION METHOD DEFINED

We develop the idea of the heuristic classification method by starting with the common sense notion of classification and relating it to the reasoning that occurs in heuristic programs.

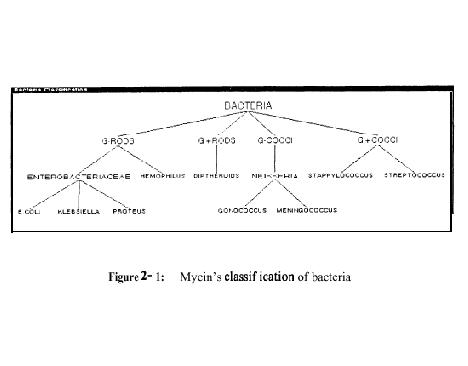

As the name suggests, the simplest kind of classification is identifying some unknown object or phenomenon as a member of a known class of objects, events, or processes. Typically, these classes are stereotypes that are hierarchically organized, and the process of identification is one of matching observations of an unknown entity against features of known classes. A paradigmatic example is identification of a plant or animal, using a guidebook of features, such as coloration, structure, and size. MYCIN solves the problem of identifying an unknown organism from laboratory cultures by matching culture information against a hierarchy of bacteria (Figure 2-1).

The essential characteristic of classification is that the problem solver selects from a set of pre-enumerated solutions. This does not mean, of course, that the “right answer” is necessarily one of these solutions, just that the problem solver will only attempt to match the data against the known solutions, rather than construct a new one. Evidence can be uncertain and matches partial, so the output might be a ranked list of hypotheses. Besides matching, there are several rules of inference for making assertions about solutions. For example, evidence for a class is indirect evidence that one of its subtypes is present.

In the simplest problems, data are solution features, so the matching process is direct. For example, an unknown organism in MYCIN can be classified directly given the supplied data of Gram stain and morphology. The features “Gram-stain negative” and “rod-shaped” match a class of organisms. The solution might be refined by getting information that allows subtypes to be discriminated.
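The matching step just described can be sketched as follows. This is a minimal illustration under stated assumptions: the hierarchy, the extra features such as `lactose-fermenter`, and the scoring rule (the fraction of a class's features present in the data) are hypothetical, not MYCIN's knowledge base or method. It shows the points made above: only pre-enumerated solutions are considered, partial matches yield a ranked list, and refinement descends to a subtype only when new data discriminate it.

```python
# Toy pre-enumerated solution hierarchy: class -> (parent, identifying features).
# "gram-negative" and "rod" follow the text; everything else is hypothetical.
HIERARCHY = {
    "organism": (None, set()),
    "gram-negative-rod": ("organism", {"gram-negative", "rod"}),
    "gram-positive-coccus": ("organism", {"gram-positive", "coccus"}),
    "e-coli": ("gram-negative-rod", {"gram-negative", "rod", "lactose-fermenter"}),
    "pseudomonas": ("gram-negative-rod", {"gram-negative", "rod", "non-fermenter"}),
}


def match_score(solution, data):
    """Fraction of a solution's features present in the data (a partial match)."""
    features = HIERARCHY[solution][1]
    return len(features & data) / len(features) if features else 0.0


def classify(data):
    """Rank the known solutions against the data; never construct a new one."""
    ranked = sorted(HIERARCHY, key=lambda s: (-match_score(s, data), s))
    return [(s, round(match_score(s, data), 2)) for s in ranked if match_score(s, data) > 0]


def refine(solution, data):
    """Descend to a subtype only while the data fully match one of the subtypes."""
    while True:
        children = sorted(s for s, (parent, _) in HIERARCHY.items() if parent == solution)
        best = max(children, key=lambda c: match_score(c, data), default=None)
        if best is None or match_score(best, data) < 1.0:
            return solution
        solution = best
```

For example, `classify({"gram-negative", "rod"})` ranks `gram-negative-rod` first, and `refine` stops at that class until a discriminating finding (here the hypothetical `lactose-fermenter`) is supplied.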

For many problems, solution features are not supplied as data, but are inferred by data abstraction. There are three basic relations for abstracting data in heuristic programs:

definitional abstraction based on essential, necessary features of a concept (“if the structure is a one-dimensional network, then its shape is a beam”);

qualitative abstraction, a form of definition involving quantitative data, usually with respect to some normal or expected value (“if the patient is an adult and white blood count is less than 2500, then the white blood count is low”); and

generalization in a subtype hierarchy (“if the client is a judge, then he is an educated person”).

These interpretations are usually made by the program with certainty; belief thresholds and qualifying conditions are chosen so the abstraction is categorical. It is common to refer to this knowledge as being “factual” or “definitional.”
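The three abstraction relations can be written as plain functions. In this sketch the attribute names (`network-dimension`, `adult`, `wbc`) and the small subtype table, including its `person` entry, are assumptions for illustration; the conditions themselves are the ones quoted above. Each function returns a categorical conclusion with no certainty attached, reflecting the point that abstractions are made with certainty.

```python
def definitional_abstraction(structure):
    # "if the structure is a one-dimensional network, then its shape is a beam"
    if structure.get("network-dimension") == 1:
        return {"shape": "beam"}
    return {}


def qualitative_abstraction(patient):
    # "if the patient is an adult and white blood count is less than 2500,
    #  then the white blood count is low" (a missing count yields no conclusion)
    if patient.get("adult") and patient.get("wbc", float("inf")) < 2500:
        return {"wbc": "low"}
    return {}


# Generalization in a subtype hierarchy: child -> parent (hypothetical toy table).
SUBTYPE_OF = {"judge": "educated-person", "educated-person": "person"}


def generalize(category):
    """Return every ancestor of a category, nearest first."""
    ancestors = []
    while category in SUBTYPE_OF:
        category = SUBTYPE_OF[category]
        ancestors.append(category)
    return ancestors
```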

In simple classification, data may directly match solution features or may match after being abstracted. In heuristic classification, solutions and solution features may also be matched heuristically, by direct, non-hierarchical association with some concept in another classification hierarchy. For example, MYCIN does more than identify an unknown organism in terms of visible features of an organism: MYCIN heuristically relates an abstract characterization of the patient to a classification of diseases. We show this inference structure schematically, followed by an example (Figure 2-2).

Figure 2-2 : Inference structure of MYCIN

Basic observations about the patient are abstracted to patient categories, which are heuristically linked to diseases and disease categories. While only a subtype link with E.coli infection is shown here, evidence may actually derive from a combination of inferences. Some data might directly match E.coli features (an individual organism shaped like a rod and producing a Gram-negative stain is seen growing in a culture taken from the patient). Descriptions of laboratory cultures (describing location, method of collection, and incubation) can also be related to the classification of diseases.

The important link we have added is a heuristic association between a characterization of the patient (“compromised host”) and categories of diseases (“gram-negative infection”). Unlike definitional and hierarchical inferences, this inference makes a great leap. A heuristic relation is uncertain, based on assumptions of typicality, and is sometimes just a poorly understood correlation. A heuristic is often empirical, deriving from problem-solving experience; heuristics correspond to the “rules of thumb” often associated with expert systems (Feigenbaum, 1977).

Heuristics of this type reduce search by skipping over intermediate relations (this is why we don’t call abstraction relations “heuristics”). These associations are usually uncertain because the intermediate relations may not hold in the specific case. Intermediate relations may be omitted because they are unobservable or poorly understood. In a medical diagnosis program, heuristics typically skip over the causal relations between symptoms and diseases. In Section 4 we will analyze the nature of these implicit relations in some detail.
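One way to see why skipping intermediate relations both shortens search and introduces uncertainty is to collapse a chain of relations into a single link. The sketch below assumes, purely for illustration, that each intermediate link holds independently with a known probability; the intermediate states and the numbers are hypothetical placeholders for relations that are unobservable or poorly understood, and only the two endpoints come from the MYCIN example.

```python
from math import prod

# A chain of intermediate relations that a heuristic might skip over.
# Intermediate states and probabilities are hypothetical placeholders,
# not medical knowledge.
CHAIN = [
    ("compromised-host", "unobserved-state-1", 0.9),
    ("unobserved-state-1", "unobserved-state-2", 0.7),
    ("unobserved-state-2", "gram-negative-infection", 0.8),
]


def compile_heuristic(chain):
    """Collapse a chain of relations into one direct, non-hierarchical link.

    Assuming each link holds independently with the given probability, the
    direct association holds only when every intermediate link does, so its
    strength is the product of the link strengths.
    """
    for (_, mid, _), (start_of_next, _, _) in zip(chain, chain[1:]):
        if mid != start_of_next:
            raise ValueError("links do not form a chain")
    return chain[0][0], chain[-1][1], prod(p for _, _, p in chain)
```

The compiled link is one lookup instead of three, but its strength (about 0.504 here) is lower than that of any single link it replaces, which is one way of reading the claim that the intermediate relations may not hold in the specific case.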

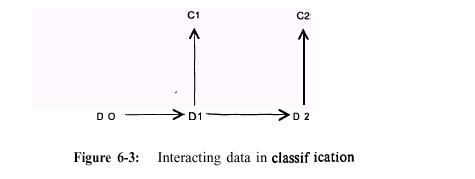

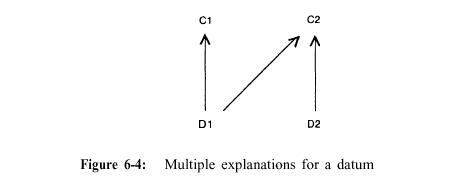

To summarize, in heuristic classification, abstracted data statements are associated with specific problem solutions or features that characterize a solution. This can be shown schematically in simple terms (Figure 2-3).

Figure 2-3 : Inference structure of heuristic classification

This diagram summarizes how a distinguished set of terms (data, data abstractions, solution abstractions, and solutions) are related systematically by different kinds of relations. This is the structure of inference in heuristic classification. The direction of inference and the relations “abstraction” and “refinement” are a simplification, indicating a common ordering (generalizing data and refining solutions), as well as a useful way of remembering the classification model. In practice, there are many operators for selecting and ordering inferences, discussed in Section 6.
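The inference structure of Figure 2-3 can be sketched as a three-step pipeline: close the data under abstraction, jump across hierarchies by heuristic association, then refine within the solution hierarchy. The link tables are assumptions: the chain from `low-wbc` to `compromised-host` loosely follows the MYCIN example, while the 0.6 strength and the refinement cue are made up. Letting a refined solution keep its parent's strength is a simplification, not MYCIN's actual certainty-factor calculus.

```python
# Data abstraction links (certain): more specific -> more abstract.
# The intermediate chain is an illustrative assumption.
ABSTRACTS_TO = {
    "low-wbc": "leukopenia",
    "leukopenia": "immunosuppressed",
    "immunosuppressed": "compromised-host",
}

# Heuristic associations (uncertain): data abstraction -> solution abstraction.
# The strength 0.6 is a made-up number for illustration.
HEURISTICS = {"compromised-host": [("gram-negative-infection", 0.6)]}

# Refinement links: solution class -> (subtype, finding that discriminates it).
REFINES_TO = {"gram-negative-infection": [("e-coli-infection", "gram-negative-rod-in-culture")]}


def abstract(findings):
    """Close the findings under the (certain) abstraction links."""
    closed = set(findings)
    frontier = list(findings)
    while frontier:
        parent = ABSTRACTS_TO.get(frontier.pop())
        if parent is not None and parent not in closed:
            closed.add(parent)
            frontier.append(parent)
    return closed


def heuristic_match(abstractions):
    """Jump across hierarchies: collect solution classes with their strengths."""
    return {sol: cf for a in abstractions for sol, cf in HEURISTICS.get(a, [])}


def refine(hypotheses, findings):
    """Replace a solution class by any subtype whose discriminating cue was observed."""
    refined = {}
    for solution, strength in hypotheses.items():
        subtypes = [sub for sub, cue in REFINES_TO.get(solution, []) if cue in findings]
        for sub in subtypes or [solution]:
            refined[sub] = strength  # simplification: the subtype inherits the strength
    return refined


def heuristic_classification(findings):
    return refine(heuristic_match(abstract(findings)), findings)
```

With only `{"low-wbc"}` the result stays at the solution class `gram-negative-infection`; adding the culture cue refines it to `e-coli-infection`.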

3. EXAMPLES OF HEURISTIC CLASSIFICATION

Here we schematically describe the architectures of SACON, GRUNDY, and SOPHIE III in terms of heuristic classification. These are brief descriptions, but they reveal the value of this kind of analysis by helping us to understand what the programs do. After a statement of the problem, the general inference structure and an example inference path are given, followed by a brief discussion. In looking at these diagrams, note that sequences of classifications can be composed, perhaps involving simple classification at one stage (SACON) or omitting “abstraction” or “refinement” (GRUNDY and SACON).

In Section 4, we will reconsider these examples in an attempt to understand the heuristic classification pattern. Our approach will be to pick apart the “inner structure” of concepts and to characterize the kinds of relations that are typically useful for problem solving.

Problem: SACON (Bennett, et al., 1978) selects classes of behavior that should be further investigated by a structural-analysis simulation program (Figure 3-1).

Discussion: SACON solves two problems by classification: heuristically analyzing a structure and then using simple classification to select a program. It begins by heuristically selecting a simple numeric model for analyzing a structure (such as an airplane wing). The numeric model, an equation, produces stress and deflection estimates, which the program then qualitatively abstracts as behaviors to study in more detail. These behaviors, with additional information about the material, definitionally characterize different configurations of the MARC simulation program (e.g., the inelastic-fatigue program). There is no refinement because the solutions to the first problem are just a simple set of possible models, and the second problem is only solved to the point of specifying program classes. (In another software configuration system we analyzed, specific program input parameters are inferred in a refinement step.)
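SACON's two composed classifications can be sketched as below. Everything concrete here is hypothetical: the model names, the stand-in “equations,” the thresholds, and the program classes other than inelastic-fatigue. The sketch only shows the shape of the reasoning: a heuristic choice of numeric model, qualitative abstraction of its estimates into behaviors, and a definitional match to a program class, with no refinement step.

```python
# Stage 1 (heuristic): structure description -> one of a few simple numeric models.
# Model names, coefficients, thresholds, and most program classes are hypothetical.
def select_model(structure):
    return "beam-model" if structure["shape"] == "beam" else "plate-model"


def estimate(model, load):
    """Stand-in for a numeric model: returns (stress, deflection) estimates."""
    stress_per_load = {"beam-model": 2.0, "plate-model": 1.0}[model]
    return load * stress_per_load, load * stress_per_load / 100


# Stage 2 (simple classification): qualitative abstraction of the estimates ...
def behaviors(stress, deflection, yield_stress):
    found = set()
    if stress > yield_stress:
        found.add("inelastic")
    if deflection > 1.0:
        found.add("large-deflection")
    return found


# ... which, with material information, definitionally match a program class.
def program_class(found, cyclic_loading):
    if "inelastic" in found and cyclic_loading:
        return "inelastic-fatigue"
    if "inelastic" in found:
        return "inelastic"
    return "linear-elastic"


def sacon_like(structure, load, yield_stress, cyclic_loading):
    model = select_model(structure)
    stress, deflection = estimate(model, load)
    return program_class(behaviors(stress, deflection, yield_stress), cyclic_loading)
```

Because the output stops at a program class, there is nothing left to refine, matching the observation that the second problem is only solved to the point of specifying program classes.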

Problem: GRUNDY (Rich, 1979) is a model of a librarian, selecting books a person might like to read.

Discussion: GRUNDY solves two classification problems heuristically, classifying a reader’s personality and then selecting books appropriate to this kind of person (Figure 3-2). While some evidence for people stereotypes is by data abstraction (a JUDGE can be inferred to be an EDUCATED-PERSON), other evidence is heuristic (watching no TV is neither a necessary nor sufficient characteristic of an EDUCATED-PERSON).

Illustrating the power of a knowledge-level analysis, we discover that the people and book classifications are not distinct in the implementation. For example, “fast plots” is a book characteristic, but in the implementation “likes fast plots” is associated with a person stereotype. The relation between a person stereotype and “fast plots” is heuristic and should be distinguished from abstractions of people and books. One objective of the program is to learn better people stereotypes (user models). The classification description of the user modeling problem shows that GRUNDY should also be learning better ways to characterize books, as well as improving its heuristics. If these are not treated separately, learning may be hindered. This example illustrates why a knowledge-level analysis should precede representation.

It is interesting to note that GRUNDY does not attempt to perfect the user model before recommending a book. Rather, refinement of the person stereotype occurs when the reader rejects book suggestions. Analysis of other programs indicates that this multiple-pass process structure is common. For example, the Drilling Advisor makes two passes on the causes of drill sticking, considering general, inexpensive data first, just as medical programs commonly consider the “history and physical” before laboratory data. The high-level, abstract structure of the heuristic classification model makes possible these kinds of descriptions and comparisons.
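The separation recommended for GRUNDY can be made concrete by keeping person abstractions, book characteristics, and the heuristic links between them in three distinct tables. This is a sketch under assumptions: the titles, traits other than “fast plots,” and weights are hypothetical, and although GRUNDY itself refines the person stereotype when a suggestion is rejected, here a rejection adjusts only the heuristic table, leaving both classifications untouched.

```python
# Three knowledge sources kept apart, as the knowledge-level analysis recommends.
# Titles, weights, and "complex-themes" are hypothetical; "judge",
# "educated person", and "fast plots" come from the text.
PERSON_ABSTRACTIONS = {"judge": "educated-person"}  # certain, definitional
BOOK_TRAITS = {                                     # classification of books
    "Book A": {"complex-themes"},
    "Book B": {"fast-plots"},
}
PERSON_TO_TRAIT = {                                 # uncertain heuristics
    "educated-person": {"complex-themes": 0.7, "fast-plots": 0.3},
}


def stereotypes(facts):
    """Abstract what is known about the reader into person stereotypes."""
    return set(facts) | {PERSON_ABSTRACTIONS[f] for f in facts if f in PERSON_ABSTRACTIONS}


def recommend(facts, rejected=()):
    """Score books by the heuristic links from the reader's stereotypes to book traits."""
    scores = {}
    for s in stereotypes(facts):
        for trait, weight in PERSON_TO_TRAIT.get(s, {}).items():
            for book, traits in BOOK_TRAITS.items():
                if trait in traits and book not in rejected:
                    scores[book] = scores.get(book, 0.0) + weight
    return max(scores, key=scores.get) if scores else None


def record_rejection(facts, book, rate=0.4):
    """Later pass: weaken only the heuristic links that led to a rejected book."""
    weakened = set()
    for s in stereotypes(facts):
        links = PERSON_TO_TRAIT.get(s, {})
        for trait in BOOK_TRAITS[book] & set(links):
            links[trait] *= rate
            weakened.add((s, trait))
    return weakened
```

Because the heuristic links are stored apart from the book descriptions, a rejection can change the person-to-book associations without altering what is known about the books, which is the learning hazard the analysis warns about.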

Figure 3-1 : Inference structure of SACON

Figure 3-2 : Inference structure of GRUNDY

Problem: SOPHIE III (Brown, et al., 1982) classifies an electronic circuit in terms of the component that is causing faulty behavior (Figure 3-3).

Figure 3-3 : Inference structure of SOPHIE

Discussion: SOPHIE's set of pre-enumerated solutions is a lattice of valid and faulty circuit behaviors. In contrast with MYCIN, SOPHIE's solutions are device states and component flaws, not stereotypes of disorders. They are related causally, not by subtype. Data are not only external device behaviors, but include internal component measurements propagated by the causal analysis of the LOCAL program. Nevertheless, the inference structure of abstractions, heuristic relations, and refinement fits the heuristic classification model, demonstrating its generality and usefulness.

4. UNDERSTANDING HEURISTIC CLASSIFICATION

The purpose of this section is to develop a principled account of why the inference structure of heuristic classification takes the characteristic form we have discovered. Our approach is to describe what we have heretofore loosely called “classes,” “concepts,” or “stereotypes” in a more formal way, using the conceptual graph notation of Sowa (Sowa, 1984). In this formalism, a concept is described by graphs of typed, usually binary relations among other concepts. This kind of analysis has its origins in semantic networks (Quillian, 1968), the conceptual-dependency notation of Schank, et al. (Schank, 1975), the prototype/perspective descriptions of KRL (Bobrow and Winograd, 1979), the classification hierarchies of KL-ONE (Schmolze and Lipkis, 1983), as well as the predicate calculus.

Our discussion has several objectives:

to relate the knowledge encoded in rule-based systems to structures more commonly associated with “semantic net” and “frame” formalisms,

to explicate what kinds of knowledge heuristic rules leave out (and thus their advantages for search efficiency and limitations for correctness), and

to relate the kinds of conceptual relations collectively identified in knowledge representation research (e.g., the relation between an individual and a class) with the pattern of inference that typically occurs during heuristic classification problem solving (yielding the characteristic inverted horseshoe inference structure of Figure 2-3).

One important result of this analysis is a characterization of the “heuristic relation” in terms of primitive relations among concepts (such as preference, accompaniment, and causal enablement), and its difference from more essential, “definitional” characterizations of concepts. In short, we are trying to systematically characterize the kind of knowledge that is useful for problem solving, which relates to our larger aim of devising useful languages for encoding knowledge in expert systems.

In the case of matching features of organisms (MYCIN) or programs (SACON), features are essential (necessary), identifying characteristics of the object, event, or process. This corresponds to the Aristotelian notion of concept definition in terms of necessary properties. In contrast, features may be only “incidental,” corresponding to typical manifestations or behaviors. For example, E.coli is normally found in certain parts of the body, an incidental property. It is common to refer to the combination of incidental and defining associations as a “schema” for the concept. Inferences made using incidental associations of a schema are inherently uncertain. For example, we might infer that a particular person, because he is educated, likes to read books, but this might not be true. In contrast, an educated person must, by definition, have learned a great deal about something (though maybe not a formal academic topic).
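The difference between defining and incidental associations can be expressed in a small data structure. This sketch assumes a numeric typicality for incidental features (the 0.8 is hypothetical); defining features are treated as certain, and the absence of a defining feature rules membership out, while the absence of an incidental one does not.

```python
from dataclasses import dataclass, field


@dataclass
class Schema:
    concept: str
    defining: set = field(default_factory=set)      # necessary features
    incidental: dict = field(default_factory=dict)  # typical feature -> assumed typicality


def infer(schema):
    """Properties of an instance of the concept, each with a certainty in [0, 1]."""
    conclusions = dict(schema.incidental)                  # only typically true
    conclusions.update({f: 1.0 for f in schema.defining})  # true by definition
    return conclusions


def could_be_member(schema, known_false):
    """Lacking a defining feature rules membership out; lacking an incidental
    feature only makes the instance less typical."""
    return not (schema.defining & set(known_false))


# The educated-person example from the text; the 0.8 typicality is hypothetical.
EDUCATED_PERSON = Schema(
    "educated-person",
    defining={"learned-a-great-deal"},
    incidental={"likes-to-read-books": 0.8},
)
```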

The nature of schemas and their representation has been studied extensively in AI. As stated in the introduction (Section 1), our purpose here is to exploit this research to understand the knowledge contained in rules. We are not advocating one representation over another; rather, we just want to find some way of writing down knowledge so that we can detect and express patterns. We use the conceptual graph notation of Sowa because it is simple and it makes basic distinctions that we find to be useful:

A schema is made up of coherent statements mentioning a given concept, not a list of isolated, independent features. (A statement is a complete sentence.)

A schema for a given concept contains relations to other concepts, not just “attributes and values” or “slots and values.”

A concept is typically described from different points of view by a set of schemata (called a “schematic cluster”), not a single “frame.”

The totality of what people know about a concept usually extends well beyond the schemas that are pragmatically encoded in programs for solving limited problems.

Finally, we adopt Sowa’s definition of a prototype as a “typical individual,” a specialization of a concept schema to indicate typical values and characteristics, where ranges or sets are described for the class as a whole. Whether a program uses prototype or schema descriptions of its solutions is not important to our discussion, and many programs may combine them, including “normal” values as well as a spectrum of expectations.

4.2. Alternative encodings of schemas

To develop the above points in some detail, we will consider a conceptual graph description and how it relates to typical rule-based encodings. Figure 4-1 shows how knowledge about the concept “cluster headache” is described using the conceptual graph notation.

Concepts appear in brackets; relations are in parentheses. Concepts are also related by a type hierarchy, e.g., a HEADACHE is a kind of PROCESS, and an OLDER-MAN is a kind of MAN. Relations are constrained to link concepts of particular types, e.g., PTIM, a point in time, links a PROCESS to a TIME. For convenience, we can also use Sowa’s linear notation for conceptual graphs. Thus, OLDER-MAN can be described as a specialization of MAN, “a man with characteristic old.” CLUSTERED is “an event occurring daily for a week.” EARLY-SLEEP is “a few hours after the state of sleep.”
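The type hierarchy and relation constraints just described can be checked mechanically. A minimal sketch, assuming a dictionary-based hierarchy and a single relation signature; the type facts come from the text and Figure 4-1 (which describes EARLY-SLEEP as a TIME), while the arc tested in the example, HEADACHE linked by PTIM to EARLY-SLEEP, is illustrative.

```python
# From the text: HEADACHE is a kind of PROCESS, OLDER-MAN a kind of MAN;
# Figure 4-1 describes EARLY-SLEEP as a TIME.
SUPERTYPE = {"HEADACHE": "PROCESS", "OLDER-MAN": "MAN", "EARLY-SLEEP": "TIME"}

# Relation signatures: PTIM (point in time) links a PROCESS to a TIME.
SIGNATURE = {"PTIM": ("PROCESS", "TIME")}


def is_a(concept, target):
    """Walk up the type hierarchy from concept looking for target."""
    while concept is not None:
        if concept == target:
            return True
        concept = SUPERTYPE.get(concept)
    return False


def well_typed(arc):
    """Check a [concept] -> (relation) -> [concept] arc against the relation's signature."""
    source, relation, dest = arc
    domain, range_type = SIGNATURE[relation]
    return is_a(source, domain) and is_a(dest, range_type)
```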

We make no claim that a representation of this kind is complete, computationally tractable, or even unambiguous. For our purposes here, it is simply a notation with the advantage over English prose of systematically revealing how what we know about a concept can be (at least partially) described in terms of its relations to concepts of other types.

For contrast, consider how this same knowledge might be encoded in a notation based upon objects, attributes, and values, as in MYCIN. Here, the object would be the PATIENT, and typical attributes would be HEADACHE-ONSET (with possible values EARLY-MORNING, EARLY-SLEEP, LATE-AFTERNOON) and DISORDER (with possible values CLUSTER-HEADACHE, INFECTION, etc.). A typical rule might be, “If the patient has headache onset during sleep, then the disorder of the patient is cluster headache.” The features of a cluster headache might be combined in a single rule. Generally, since none of the features are logically necessary, they are considered in separate rules, with certainty factors denoting how strongly the symptom (or predisposition, in the case of age) is correlated with the disease.
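The effect of splitting the features into separate rules can be sketched with MYCIN's combining function for two confirming certainty factors, CF1 + CF2(1 - CF1). The rule names and strengths below are hypothetical, and disconfirming evidence, which MYCIN handles with other cases of the combining function, is left out.

```python
from functools import reduce

# Separate MYCIN-style rules: (finding, conclusion, certainty factor).
# Findings loosely follow Figure 4-1; the certainty factors are hypothetical.
RULES = [
    ("headache-onset-early-sleep", "cluster-headache", 0.4),
    ("headache-clustered", "cluster-headache", 0.5),
    ("patient-older-man", "cluster-headache", 0.2),  # a predisposition, not a symptom
]


def combine(cf1, cf2):
    """Combine two confirming (positive) certainty factors."""
    return cf1 + cf2 * (1 - cf1)


def conclude(findings, disorder):
    """Accumulate the certainty of a disorder over every rule whose finding is present."""
    cfs = [cf for finding, conclusion, cf in RULES if conclusion == disorder and finding in findings]
    return reduce(combine, cfs, 0.0)
```

Since the combination equals 1 - (1 - CF1)(1 - CF2), the order in which the separate rules fire does not change the result.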

[EARLY-SLEEP] is

[TIME: [STATE: [SLEEP]] -> (AFTER) -> [TIME-PERIOD: @few-hrs]]

[CLUSTERED] is

[DAILY] <- (FREQ) <- [EVENT] -> (DURATION) -> [TIME-PERIOD: @1week]

[OLDER-MAN] is

[MAN] -> (CHRC) -> [OLD]

Figure 4-1 : Schema describing the concept CLUSTER-HEADACHE and some related concepts

A primitive “frame” representation, as in INTERNIST (Pople, 1982), is similar, with a list of attributes for each disorder, but each attribute is an “atomic” unit that bundles together what is broken into object, attribute, and value in MYCIN, e.g., “HEADACHE-ONSET-OCCURS-EARLY-SLEEP.”

The idea of relating a concept (such as CLUSTER-HEADACHE) to a set of attributes or descriptors is common in AI programs. However, a relational analysis reveals marked differences in what an attribute might be:

An attribute is an atomic proposition. In INTERNIST, an attribute is a string that is only related to diseases or other strings, e.g., HEADACHE-ONSET-EARLY-SLEEP-EXPERIENCED-BY-PATIENT.

An attribute is a relation characterizing some class of objects. In MYCIN, an attribute is associated with an instance of an object (a particular patient, culture, organism, or drug).

An attribute is a unary relation. A MYCIN attribute with the values “yes or no” corresponds to a unary relation, (<attribute> <object>), e.g., (HEADACHE-ONSET-EARLY-SLEEP PATIENT), “headache onset during early sleep is experienced by the patient.”

An attribute is a binary relation. A MYCIN attribute with values corresponds to a binary relation, (<attribute> <object> <value>), e.g., (HEADACHE-ONSET PATIENT EARLY-SLEEP), “headache onset experienced by the patient is during early sleep.”

An attribute is a relation among classes. Each class is a concept. Taking the same example, there are two more primitive relations, ONSET and EXPERIENCER, yielding the propositions (ONSET HEADACHE EARLY-SLEEP), “the onset of the headache is during early sleep,” and (EXPERIENCER HEADACHE PATIENT), “the experiencer of the headache is the patient.” More concisely, [EARLY-SLEEP] <- (ONSET) <- [HEADACHE] -> (EXPR) -> [PATIENT]. These relations and concepts can be further broken down, as shown in Figure 4-1.
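The difference among these encodings shows up in what can be asked of them. In the sketch below the spellings follow the text's examples, and the helper function is a hypothetical illustration. In the binary MYCIN-style encoding the onset attribute hangs on PATIENT; only the relational encoding makes it possible to ask which concept a piece of knowledge characterizes, and it answers HEADACHE.

```python
# The same fact under the encodings listed above, spelled as in the text.
ATOMIC = "HEADACHE-ONSET-EARLY-SLEEP-EXPERIENCED-BY-PATIENT"  # INTERNIST-style string
UNARY = ("HEADACHE-ONSET-EARLY-SLEEP", "PATIENT")             # yes/no MYCIN attribute
BINARY = ("HEADACHE-ONSET", "PATIENT", "EARLY-SLEEP")         # MYCIN attribute with a value
RELATIONAL = {                                                 # relations among concepts
    ("ONSET", "HEADACHE", "EARLY-SLEEP"),
    ("EXPERIENCER", "HEADACHE", "PATIENT"),
}


def characterizations_of(concept, triples):
    """(relation, value) pairs that characterize the given concept."""
    return sorted((rel, obj) for rel, subj, obj in triples if subj == concept)
```

Here `characterizations_of("HEADACHE", RELATIONAL)` returns both the onset and the experiencer, while the same query for PATIENT returns nothing: the patient appears only as the experiencer of the headache.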

The conceptual graph notation encourages clear thinking by forcing us to unbundle domain terminology into defined or schematically described terms and a constrained vocabulary of relations (restricted in the types of concepts each can link). Rather than saying that “an object has attributes,” we can be more specific about the relations among entities, describing abstract concepts like “headache” and “cluster” in the same notation we use to describe concrete objects like patients and organisms. In particular, notice that headache onset is a characterization of a headache, not of a person, contrary to the MYCIN statement that “headache onset is an attribute of person.” Similarly, the relation between a patient and a disorder is different from the relation between a patient and his age.

Breaking apart “parameters” into concepts and relations has the additional benefit of allowing them to be easily related, through their schema descriptions. For example, it is clear that HEADACHE-ONSET and HEADACHE-SEVERITY both characterize HEADACHE, allowing us to write a simple, general inference rule for deciding about relevancy: “If a process type being characterized (e.g., HEADACHE) is unavailable or not relevant, then its characterization (e.g., HEADACHE-ONSET) is not relevant.” As another example, consider a discrimination inference strategy that compares disorder processes on the basis of their descriptions as events. Knowing what relations are comparable (e.g., location and frequency), the inference procedure can automatically gather relevant data, look up the schema descriptions, and make comparisons to establish the best match. To summarize, the rules in a program like MYCIN are implicitly making statements about schemas. This becomes clear when we separate conceptual links from rules of inference, as in NEOMYCIN.
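The relevancy rule can be stated once, over schema links, instead of being repeated in a rule for every parameter. Below is a minimal Python sketch, assuming a hypothetical `CHARACTERIZES` table that records which concept each parameter describes; the parameter names are illustrative and are not taken from NEOMYCIN's knowledge base.

```python
# Hypothetical schema links: each parameter names the concept it characterizes.
CHARACTERIZES = {
    "HEADACHE-ONSET": "HEADACHE",
    "HEADACHE-SEVERITY": "HEADACHE",
    "HEADACHE-LOCATION": "HEADACHE",
    "PATIENT-AGE": "PATIENT",
}


def is_relevant(parameter, relevant_concepts):
    """One general rule instead of one rule per parameter: a characterization
    is not relevant if the thing it characterizes is unavailable or not relevant."""
    characterized = CHARACTERIZES.get(parameter)
    if characterized is None:
        return True  # no schema information, so no basis for ruling it out
    return characterized in relevant_concepts


def questions_to_ask(parameters, relevant_concepts):
    """Filter candidate questions using the schema links above."""
    return [p for p in parameters if is_relevant(p, relevant_concepts)]


# A patient who reports no headache: every HEADACHE-* question is pruned.
print(questions_to_ask(list(CHARACTERIZES), {"PATIENT"}))
# ['PATIENT-AGE']
```

Adding a new headache parameter only requires a new schema entry; the pruning rule itself does not change.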

4.3. Relating heuristics to conceptual graphs

Given all of the structural and functional statements we might make about a concept, describing processes and interactions in detail, some statements will be more useful than others for solving problems. Rather than thinking of schemas as inert, static descriptions, we are interested in how they link concepts to solve problems. The description of CLUSTER-HEADACHE given in Figure 4-1 includes the knowledge that one typically finds in a diagnostic program. To understand heuristics in these terms, consider first that some relations appear to be less “incidental” than others. The time of occurrence of the headache, location, frequency, and characterizing features are all closely bound to what a cluster headache is. They are not necessary, but together they distinguish CLUSTER-HEADACHE from other types. That is, these relations discriminate this headache from other types of headache.

On the other hand, accompaniment by lacrimation (tearing of the eyes) and the tendency for such headaches to be experienced by older men are correlations with other concepts. Here, in particular, we see the link between different kinds of entities: a DISORDER-PROCESS and a PERSON. This is the link we have identified as a heuristic: a direct, non-hierarchical association between concepts of different types. Observe that why an older man experiences cluster headaches is left out. Given a model of the world that says that all phenomena are caused, we can say that each of the links with HEADACHE could be explained causally. Whether the explanation has been left out or is not known cannot be determined by examining the conceptual graph, a critical point we will return to later.

When heuristics are stated as rules in a program like MYCIN, even known relational and definitional details are often omitted. This often means that intermediate concepts are omitted as well. We say “X suggests Y,” or “X makes you think of Y.” Unless the connection is an unexplained correlation, such a statement can be expanded to a full sentence that is part of the schema description of X and/or Y. Thus, the geologist’s rule “goldfields flowers --> serpentine rock” might be restated as, “Serpentine rock has nutrients that enable goldfields to grow well.” Figure 4-2 shows the conceptual graph notation of this statement (with “enable” shown by the relation “instrument” linking an entity, nutrients, to an act, growing).

Figure 4-2 : A heuristic rule expanded as a conceptual graph

The concepts of nutrients and growing are omitted from the rule notation, just as the causal details that explain the growth process are skipped over in the conceptual graph notation. The rule indicates what you must observe (goldfields flowers growing) and what you can assert (serpentine rock is near the surface). It captures knowledge not as mere static descriptions, but as efficient, useful connections for problem solving. Moreover, the example makes clear the essential characteristic of a heuristic inference: a non-hierarchical and non-definitional connection between concepts of distinct classes.
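The contrast between the rule and its expansion can be sketched as data. In this minimal Python sketch the rule is a single premise/conclusion pair, while the expansion is a set of relation triples. INST follows the text's use of “instrument”; the relation names PART and AGNT and all token names are assumptions made for illustration, not a transcription of Figure 4-2.

```python
# The heuristic rule: observable premise -> assertable conclusion.
RULE = ("GOLDFIELDS-GROWING", "SERPENTINE-NEAR-SURFACE")

# An expansion of "Serpentine rock has nutrients that enable goldfields to
# grow well" as (relation, from_concept, to_concept) triples. INST stands
# for "instrument" as in the text; PART and AGNT are assumed relation names.
EXPANSION = {
    ("PART", "SERPENTINE", "NUTRIENTS"),
    ("INST", "GROW", "NUTRIENTS"),
    ("AGNT", "GROW", "GOLDFIELDS"),
}


def apply_rule(rule, observations):
    """Forward use of the rule: if the premise is observed, assert the conclusion."""
    premise, conclusion = rule
    return {conclusion} if premise in observations else set()


def concepts(triples):
    """Every concept that appears at either end of a relation."""
    return {c for (_, a, b) in triples for c in (a, b)}


print(apply_rule(RULE, {"GOLDFIELDS-GROWING"}))  # {'SERPENTINE-NEAR-SURFACE'}
# Concepts present in the expansion but absent from the rule notation:
print(sorted(concepts(EXPANSION) - {"SERPENTINE", "GOLDFIELDS"}))  # ['GROW', 'NUTRIENTS']
```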

Heuristics are selected statements that are useful for inference, particularly how one class choice constrains another. Consider the goldfields example. Is the conceptual graph shown in Figure 4-2 a schema for serpentine, goldfields, nutrient, or all three? First, knowledge is something somebody knows; whether goldfields is associated with nutrients will vary from person to person. (And for at least a short time, readers of this paper will think of goldfields when the word “nutrient” is mentioned.) Second, the real issue is how knowledge is practically indexed. The associations a problem solver forms and the directionality of these associations will depend on the kinds of situations he is called upon to interpret, and on what is given and what is derived. Thus, it seems plausible that a geologist in the field would see goldfields (data) and think about serpentine rock (solution). Conversely, his task might commonly be to find outcroppings of serpentine rock; he would work backwards to think of observables that he might look for (data) that would indicate the presence of serpentine. Indeed, he might have many associations with flowers and rocks, and even many general rules for how to infer rocks (e.g., based on other plants, drainage properties of the land, slope). Figure 4-3 shows one possible inference path.
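The point about indexing can be illustrated by storing one set of associations and indexing it in both directions. This is a minimal sketch; the observable/rock pairs below are hypothetical placeholders, not geological facts, and the function names are invented for the example.

```python
from collections import defaultdict

# Hypothetical associations between observables (data) and rock types
# (solutions). Placeholders for illustration, not geological claims.
ASSOCIATIONS = [
    ("goldfields", "serpentine"),
    ("poor drainage", "serpentine"),
    ("steep slope", "granite"),
]

# The same associations indexed in both directions. Which index a problem
# solver actually builds depends on what is usually given and what is derived.
by_data = defaultdict(set)
by_solution = defaultdict(set)
for datum, solution in ASSOCIATIONS:
    by_data[datum].add(solution)
    by_solution[solution].add(datum)


def suggest_solutions(observed):
    """Forward: a geologist sees flowers and thinks of rocks."""
    return set().union(*(by_data.get(d, set()) for d in observed))


def observables_to_look_for(solution):
    """Backward: a geologist seeking serpentine thinks of what to look for."""
    return sorted(by_solution.get(solution, set()))


print(suggest_solutions({"goldfields"}))        # {'serpentine'}
print(observables_to_look_for("serpentine"))    # ['goldfields', 'poor drainage']
```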

In summary, a heuristic association is a connection that relates data that is commonly available to the kinds of interpretations the problem solver is trying to derive. For a physician starting with characteristics of a person, the patient, connections to diseases will be useful. It must be possible to relate new situations to previous interpretations and this is what the abstraction process in classification is all about (recall the quotation from Bruner in Section 1). The specific person becomes an “old man” and particular disorders come to mind.

Figure 4-3 : Using a general rule to work backwards from a solution

Problems tend to start with objects in the real world, so it makes sense that practical problem-solving knowledge would allow problems to be restated in terms of stereotypical objects: kinds of people, kinds of patients, kinds of stressed structures, kinds of malfunctioning devices, etc. Based on our analysis of expert systems, links from these data concepts to solution concepts come in different flavors:

agent or experiencer (e.g., people predisposed to diseases)

cause, co-presence, or correlation (e.g., symptoms related to faults)

preference or advantage (e.g., people related to books)

physical model (e.g., abstract structures related to numeric models)

These relations don’t characterize a solution in terms of “immediate properties”: they are not definitional or type discriminating. Rather, they capture incidental associations between a solution and available data, usually concrete concepts. (Other kinds of links may be possible; these are the ones we have discovered so far.)

The essential characteristic of a heuristic is that it reduces search. A heuristic rule reduces a conceptual graph to a single relation between two concepts. Through this, heuristic rules reduce search in several ways:

While not having to think about intermediate connections is advantageous, this sets up a basic conflict for the problem solver: his inferential leaps may be wrong. Another way of saying that the problem solver skips over things is that there are unarticulated assumptions on which the interpretation rests. We will consider this further in the section on inference strategies (Section 6).
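The claim that a rule collapses a path through a conceptual graph into a single link can be illustrated with a small search. This minimal Python sketch assumes a toy undirected network whose nodes and edges are invented for the example; breadth-first search stands in for reasoning through intermediate concepts, and a dictionary lookup stands in for the heuristic rule.

```python
from collections import deque

# A toy, undirected conceptual network. Nodes and edges are illustrative only.
NETWORK = {
    "goldfields": ["grow", "flowers"],
    "grow": ["goldfields", "nutrients", "water"],
    "nutrients": ["grow", "serpentine", "soil"],
    "serpentine": ["nutrients", "rock"],
    "flowers": ["goldfields"],
    "water": ["grow"],
    "soil": ["nutrients"],
    "rock": ["serpentine"],
}


def bfs_expansions(graph, start, goal):
    """Breadth-first search; returns (path, number of nodes expanded)."""
    frontier = deque([[start]])
    seen = {start}
    expanded = 0
    while frontier:
        path = frontier.popleft()
        node = path[-1]
        expanded += 1
        if node == goal:
            return path, expanded
        for nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(path + [nxt])
    return None, expanded


# The heuristic rule compiles the whole path into one direct association.
HEURISTIC = {"goldfields": "serpentine"}

path, cost = bfs_expansions(NETWORK, "goldfields", "serpentine")
print(path)                      # ['goldfields', 'grow', 'nutrients', 'serpentine']
print(cost)                      # 6
print(HEURISTIC["goldfields"])   # serpentine, in a single step
```

The lookup is cheaper, but it no longer records the intermediate concepts (growing, nutrients) on which the inference rests, which is the source of the conflict described above.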

4.4. Relating inference structure to conceptual graphs

In the inference-structure diagrams (such as Figure 3-2) nodes stand for propositions (e.g., “the reader is an educated person”). The diagrams relate propositions on the basis of how they can be inferred from one another: type, definition, and heuristic. So far in this section we have broken apart these atomic propositions to distinguish a heuristic link from essential and direct characterizing relations in a schema; and we have argued that direct, accidental connections between concepts, which leave out intermediate relations, are valuable for reducing search.

Figure 4-4 : Conceptual relations used in heuristic classification

Here we return to the higher-level, inference-structure diagrams and include the details of the kinds of links that are possible. In Figure 4-4 each kind of inference relation between concepts is shown as a line. Classes can be connected to one another by any of these three kinds of inference relations. We make a distinction between heuristics (direct, non-hierarchical, class-class relations, such as the link between goldfields and serpentine rock) and definitions (including necessary and discriminating relations, plus qualitative abstraction (see Section 2.2)). Definitional and subtype links are shown vertically, to conform to our intuitive idea of generalization of data to higher categories, what we have called data abstraction.

It is important to remember that the “definitional” links are often non-essential, “soft” descriptions. The “definition” of leukopenia as white blood count less than normal is a good example. “Normal” depends on everything else happening to the patient, so inferring this condition always involves making some assumptions.

Note also that this is a diagram of static, structural relations. In actual problem solving other links will be required to form a case-specific model, indicating propositions the problem solver believes to be true and support for them. In particular, surrogates (Sowa, 1984) (also called individuals (Brachman, 1977), such as the MYCIN “context” ORGANISM-1) will stand for unknown objects or processes in the world that are identified by associating them with a class in a type hierarchy.

Now we are ready to put this together to understand the pattern behind the inference structure of heuristic classification. Given that a sequence of heuristic classifications, as in GRUNDY, is possible, indeed common, we start with the simplest case by assuming that data classes are not inferred heuristically. Instead, data are supplied directly or inferred by definition. When solution classes are inferred by definition, we have a case of simple classification (Section 2.1), for example, when an organism is actually seen growing in a laboratory culture (like a smoking gun). In order to describe an idealized form of heuristic classification, we leave out definitional inference of solutions. Finally, inference has the general form that problem descriptions must be abstracted (proceeding from subclass to class) and partial solutions must be refined (proceeding from class to subclass).

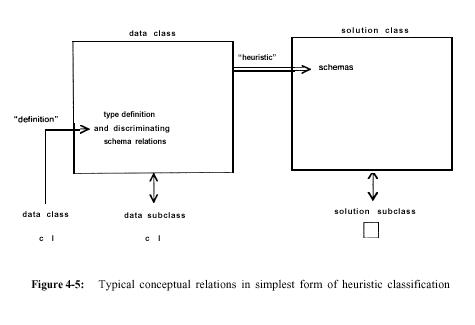

If we thus specialize the right side of the inference diagram in Figure 4-4 to a data class and a solution class and glue them together, we get a refined version of the original inverted horseshoe (Figure 2-3). Figure 4-5 shows how data and solution classes are typically inferred from one another in the simplest case of heuristic classification. This diagram should be contrasted with all of the possible networks we could construct, linking concepts by the three most general relations (subtype, definitional, incidental). For example, all links might have been definitional, all concepts subsumed by a single class, or data only incidentally related to other concepts. Furthermore, considering knowledge apart from how it is used, we might imagine complex networks of concepts, intricately related, as suggested by Figure 4-1. Instead, we find that diverse classification structures are often linked directly, omitting relational details. Clearly independent of programming language, this pattern is very likely an essential aspect of practical, experiential models of the world.
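The three steps of the idealized form (data abstraction, heuristic match, refinement) can be sketched end to end. This is a minimal Python illustration under stated assumptions: the abstraction chain follows the WBC example used later in this section, while GRAM-NEGATIVE-INFECTION, the subtype list, and the discriminating findings `finding-a`/`finding-b` are hypothetical placeholders chosen only to show refinement. It is not clinical knowledge and not MYCIN's rule set.

```python
# Data abstraction, part 1: a definitional (qualitative) abstraction.
def qualitative_abstraction(data):
    facts = set()
    if data.get("WBC", float("inf")) < 2500:
        facts.add("LEUKOPENIA")
    return facts


# Data abstraction, part 2: generalization (subtype -> supertype).
GENERALIZES = {
    "LEUKOPENIA": "IMMUNOSUPPRESSED",
    "IMMUNOSUPPRESSED": "COMPROMISED-HOST",
}

# Heuristic match: a direct, non-hierarchical link from a data class to a
# solution class. The solution class named here is a hypothetical placeholder.
HEURISTIC_MATCH = {"COMPROMISED-HOST": "GRAM-NEGATIVE-INFECTION"}

# Refinement: solution class -> subtypes, each accepted only if a
# hypothetical discriminating finding is present.
SUBTYPES = {"GRAM-NEGATIVE-INFECTION": ["E.COLI-INFECTION", "OTHER-GRAM-NEGATIVE"]}
REQUIRES = {"E.COLI-INFECTION": "finding-a", "OTHER-GRAM-NEGATIVE": "finding-b"}


def abstract(data):
    """Proceed from subclass to class on the data side."""
    facts = qualitative_abstraction(data)
    frontier = list(facts)
    while frontier:
        parent = GENERALIZES.get(frontier.pop())
        if parent and parent not in facts:
            facts.add(parent)
            frontier.append(parent)
    return facts


def refine(solution, findings):
    """Proceed from class to subclass on the solution side, as far as data allow."""
    for sub in SUBTYPES.get(solution, []):
        if REQUIRES.get(sub) in findings:
            return refine(sub, findings)
    return solution


def classify(data, findings):
    abstractions = abstract(data)
    matches = {HEURISTIC_MATCH[a] for a in abstractions if a in HEURISTIC_MATCH}
    return {refine(m, findings) for m in matches}


print(classify({"WBC": 2000}, {"finding-a"}))  # {'E.COLI-INFECTION'}
print(classify({"WBC": 2000}, set()))          # {'GRAM-NEGATIVE-INFECTION'}
print(classify({"WBC": 7000}, set()))          # set()
```

Only the HEURISTIC_MATCH table crosses between the data and solution hierarchies; everything else moves vertically, matching the shape of the inverted horseshoe.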

4.5. Pragmatics of defining concepts

In the course of writing and analyzing heuristic programs, we have been struck by the difficulty of defining terms. What is a “compromised host?” How is it different from “immunosuppressed”? Is an alcoholic immunosuppressed? We do not simply write down descriptions of what we know. The very process of formalizing terms and relations changes what we know, and itself brings about concept formation.

In many respects, the apparently unprincipled nature of MYCIN is a good reflection of the raw state of how experts talk. Two problems we encountered illustrate the difficulty of proceeding without a formal conceptual structure, and thus reflect the unprincipled state of what experts know about their own reasoning:

Twice we completely reworked the hierarchical relations among immunosuppression and compromised host conditions. There clearly is no agreed-upon network that we can simply write down. People do not know schema hierarchies in the same sense that they know phone numbers. A given version is believed to be better because it makes finer distinctions, so it leads to better problem solving.

The concepts of “significant organism” and “contaminant” were sometimes confused in MYCIN. An organism is significant if there is evidence that it is associated with a disease. A contaminant is an organism growing on a culture that was introduced because of dirty instruments or was picked up from some body site where it normally grows (e.g., a blood culture may be contaminated by skin organisms). Thus, evidence against contamination supports the belief that the discovered organism is significant. However, a rule writer would tend to write “significant” rather than “not contaminant,” even though this was the intended, intermediate interpretation. There may be a tendency to directly form a general, positive categorization, rather than to make an association to an intermediate, ruled-out category.

To a first approximation, it appears that what we “really” know is what we can conclude given other information. That is, we start with just implication (P -> Q), then go back to abstract concepts into types and understand relations among them. For example, we start by knowing that “WBC < 2500 -> LEUKOPENIA.” To make this principled, we break it into the following pieces:

1. “Leukopenia” means that the count of leukocytes is impoverished:

[LEUKOPENIA] = [LEUKOCYTES] -> (CHRC) -> [CURRENT-COUNT] -> (CHRC) -> [IMPOVERISHED]

2. “Impoverished” means that the current measure is much less than normal:

[IMPOVERISHED: x] = [CURRENT-MEASURE: x] -> (<<) -> [NORMAL-MEASURE: x]

3. The (normal/current) count is a kind of measure:

[COUNT] < [MEASURE]

4. A fact: the normal leukocyte count in an adult is 7000:

[LEUKOCYTES] -> (CHRC) -> [NORMAL-COUNT] -> (MEAS) -> [MEASURE: 7000/mm3]

With the proper interpreter (and perhaps some additional definitions and relations), we could instantiate and compose these expressions to get the effect of the original rule. This is the pattern we follow in knowledge engineering, constantly decomposing terms into general types and relations to make explicit the rationale behind implications.
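Composing the four pieces can be sketched directly. This minimal Python sketch is not a conceptual graph interpreter; it hand-translates each piece into a function. The text does not say how much smaller “<<” (much less than) requires, so the ratio below is an assumption chosen so that the composition reproduces the original rule, and treating the normal count as a constant is exactly the kind of assumption the earlier remark about “normal” warns of.

```python
from fractions import Fraction

# Piece 4, a fact: normal leukocyte count in an adult, per mm^3.
NORMAL_COUNT = {"LEUKOCYTES": 7000}

# Piece 2 needs a meaning for "<<" (much less than). The text gives none;
# this ratio is an assumption chosen so the composition reproduces the
# original rule. Fraction keeps the boundary comparison exact.
MUCH_LESS_RATIO = Fraction(2500, 7000)


def much_less_than(current, normal):
    return current < MUCH_LESS_RATIO * normal


def impoverished(cell_type, current_count):
    """Pieces 2 and 3: a count is a kind of measure, so it can be impoverished.
    Treating the normal count as a constant is itself an assumption."""
    return much_less_than(current_count, NORMAL_COUNT[cell_type])


def leukopenia(wbc):
    """Piece 1: the current leukocyte count is impoverished."""
    return impoverished("LEUKOCYTES", wbc)


def leukopenia_rule(wbc):
    """The original, compiled rule: WBC < 2500 -> LEUKOPENIA."""
    return wbc < 2500


# The composed definitions agree with the compiled rule on every count tested.
print(all(leukopenia(w) == leukopenia_rule(w) for w in range(0, 20001)))  # True
```

If the normal count were made to depend on the patient (age, other conditions), only NORMAL_COUNT would change, while the compiled rule would have to be rewritten by hand.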

Perhaps one of the most perplexing difficulties we encounter is distinguishing between subtype and cause, and between state and process. Part of the problem is that cause and effect are not always distinguished by our experts. For example, a physician might speak of a brain-tumor as a kind of brain-mass-lesion. It is certainly a kind of brain mass, but it causes a lesion (cut); it is not a kind of lesion. Thus, the concept bundles cause with effect and location: a lesion in the brain caused by a mass of some kind is a brain-mass-lesion (Figure 4-6).

[MASS] -> (CAUS) -> [LESION] -> (LOC) -> [BRAIN]

Figure 4-6: Conceptual graph of the term “brain-mass-lesion”

Similarly, we draw causal nets linking abnormal states, saying that brain-hematoma (mass of blood in the brain) is caused by brain-hemorrhage (bleeding). To understand what is happening, we profit by labeling brain-hematoma as a substance (a kind of brain-mass) and brain-hemorrhage as a process that affects or produces the substance. Yet when we began, we thought of brain-hemorrhage as if it were equivalent to the escaping blood.
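Keeping subtype and cause apart amounts to typing the links. This minimal Python sketch encodes the brain examples above as (concept, relation, concept) triples with two hypothetical relation labels, ISA and CAUS, and shows that following only ISA links never turns an effect into a supertype.

```python
# Typed links keep "is a kind of" (ISA) separate from "causes" (CAUS).
# The relation labels are illustrative; the facts follow the text above.
LINKS = {
    ("BRAIN-TUMOR", "ISA", "BRAIN-MASS"),
    ("BRAIN-HEMATOMA", "ISA", "BRAIN-MASS"),
    ("BRAIN-MASS", "CAUS", "LESION"),
    ("BRAIN-HEMORRHAGE", "CAUS", "BRAIN-HEMATOMA"),
}


def supertypes(concept):
    """Follow only ISA links, transitively."""
    result, frontier = set(), [concept]
    while frontier:
        current = frontier.pop()
        for (a, rel, b) in LINKS:
            if a == current and rel == "ISA" and b not in result:
                result.add(b)
                frontier.append(b)
    return result


def effects(concept):
    """Direct effects of a concept or of any of its supertypes."""
    sources = {concept} | supertypes(concept)
    return {b for (a, rel, b) in LINKS if rel == "CAUS" and a in sources}


print(supertypes("BRAIN-TUMOR"))              # {'BRAIN-MASS'}
print(effects("BRAIN-TUMOR"))                 # {'LESION'}
print("LESION" in supertypes("BRAIN-TUMOR"))  # False: a tumor causes a lesion, it is not one
print(effects("BRAIN-HEMORRHAGE"))            # {'BRAIN-HEMATOMA'}
```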

It is striking that we can learn concepts and how to relate them to solve problems, without understanding the links in a principled way. If you know that WBC < 2500 is leukopenia, a form of immunosuppression, which is a form of compromised host, causing E.coli infection, you are on your way to being a clinician. As novices, we push tokens around in the same non-comprehending way as MYCIN.
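Token pushing of this kind is easy to reproduce. In this minimal Python sketch the chain from the paragraph above is written as untyped implications and run by a simple forward chainer; the helper `link_between` is hypothetical and shows that the only relation such a program can report is “implies,” never “is a form of” or “causes.”

```python
# Untyped token-to-token rules, following the chain in the text.
RULES = [
    ("WBC<2500", "LEUKOPENIA"),
    ("LEUKOPENIA", "IMMUNOSUPPRESSION"),
    ("IMMUNOSUPPRESSION", "COMPROMISED-HOST"),
    ("COMPROMISED-HOST", "E.COLI-INFECTION"),
]


def forward_chain(facts, rules):
    """Apply rules until nothing new can be concluded."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            if premise in facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


def link_between(a, b, rules):
    """All the program can say about how two tokens are related."""
    return "implies" if (a, b) in rules else None


print(sorted(forward_chain({"WBC<2500"}, RULES)))
# ['COMPROMISED-HOST', 'E.COLI-INFECTION', 'IMMUNOSUPPRESSION', 'LEUKOPENIA', 'WBC<2500']
print(link_between("LEUKOPENIA", "IMMUNOSUPPRESSION", RULES))
# implies  (not "subtype" and not "cause")
```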

Once we start asking questions, we have difficulty figuring out how concepts are related. If immunosuppression is the state of being unable to fight infection by mechanisms, then do impoverished white cells cause this state? Or is it caused by this state (something else affected the immunosystem, reducing the WBC as a side-effect)? (Worse yet, we may say it is an “indicator,” completely missing the fact that we are talking about causality.) Perhaps it is one way in which the immunosystem can be diminished, so it is a kind of immunosuppression. It is difficult to write down a principled network because we don’t know the relations, and we don’t know them because we don’t know what the concepts mean; we don’t understand the processes involved. Yet, we might know enough to relate data classes to therapy classes and save the patient’s life!

A conceptual graph or logic analysis suggests that the relations among concepts are relatively few in number and fixed in meaning, compared to the number and complexity of concepts. The meaning of concepts depends on what we ascribe to the links that join them. Thus, in practice we jockey around concepts to get a well-formed network. Complicating this is our tendency to use terms that bundle cause with effect and to relate substances directly, leaving out intermediate processes. At first, novices might be like today’s expert programs. A concept is just a token or label, associated with knowledge of how to infer truth and how to use information (what to do if it is true and how to infer other truths from it). Unless the token is defined by something akin to a conceptual graph, it is difficult to say that the novice or program understands what it means. But in the world of action, what matters more than the functional, pragmatic knowledge of knowing what to do?

Where does this leave us? One conclusion is that “principled networks” are impossible. Except for mathematics, science, economics, and similar domains, concepts do not have formal definitions. While heuristic programs sometimes reason with concrete, well-defined classifications such as the programs in SACON and the fault network in SOPHIE, they more often use experiential schemas, the knowledge we say distinguishes the expert from the novice. In the worst case, these experiential concepts are vague and incompletely understood, such as the diseases in MYCIN. In general, there are underlying (unarticulated or unexamined) assumptions in every schema description. Thus, the first conclusion is that for concepts in nonformal domains this background and context cannot in principle be made explicit (Flores and Winograd, 1985). That is, our conceptual knowledge is inseparable from our as yet ungeneralized memory of experiences.

An alternative point of view is that, regardless of ultimate limitations, it is obvious that expert systems will be valuable for replacing the expert on routine tasks, aiding him on difficult tasks, and generally transforming how we write down and teach knowledge. Much more can be done in terms of memory representation, learning from experience, and combining principled models with situation/action, pragmatic rules. Specifically, the problem of knowledge transformation could become a focus for expert systems research, including compilation for efficiency, derivation of procedures for enhancing explanation (Swartout, 1981), and re-representation for detecting and explaining patterns, thus aiding scientific theory formation. Studying and refining actual knowledge bases, as exemplified by this section, is our chief methodology for improving our representations and inference procedures. Indeed, from the perspective of knowledge transformation, it is ironic to surmise that we might one day decide that the “superficial” representation of EMYCIN rules is a fine executable language, and something like it will become the target for our knowledge compilers.

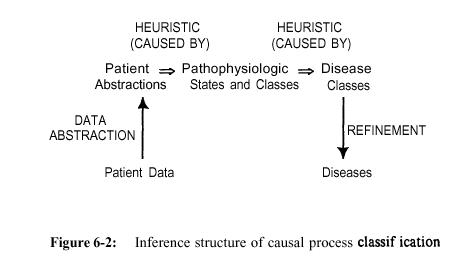

5. ANALYSIS OF PROBLEM TYPES IN TERMS OF SYSTEMS

The heuristic classification model gives us two new angles for comparing problem-solving methods and kinds of problems. First, it suggests that we characterize programs by whether solutions are selected or constructed. This leads us to the second perspective, that different “kinds of things” might be selected or constructed (diagnoses, user models, etc.). In this section we will adopt a single point of view, namely that a solution is most generally a set of beliefs describing what is true about a system or a set of actions (operations) that will physically transform a system to a desired description. We will study variations of system description and transformation problems, leading to a hierarchy of kinds of problems that an expert might solve.

This foray into systems analysis begins very simply with the observation that all classification problem solving involves selection of a solution. We can characterize kinds of problems by what is being selected:

diagnosis: solutions are faulty components (SOPHIE) or processes affecting the device (MYCIN);

user model: solutions are people stereotypes in terms of their goals and beliefs (first phase of GRUNDY);

catalog selection: solutions are products, services, or activities, e.g., books, personal computers, careers, travel tours, wines, investments (second phase of GRUNDY);

model-based analysis: solutions are numeric models (first phase of SACON);

skeletal planning: solutions are plans, such as packaged sequences of programs and parameters for running them (second phase of SACON, also first phase of experiment planning in MOLGEN (Friedland, 1979)).

Attempts to make knowledge engineering a systematic discipline often begin with a listing of kinds of problems. This kind of analysis is always prone to category errors. For example, a naive list of “problems” might list “design,” “constraint satisfaction,” and “model-based reasoning,” combining a kind of problem, an inference method, and a kind of knowledge. Indeed, one might solve a VLSI chip design problem using constraint satisfaction to reason about models of circuit components. It is important to adopt a single perspective when making a list of this kind.

In particular, we must not confuse what gets selected (what constitutes a solution) with the method for computing the solution. A common misconception is that there is a kind of problem called a “classification problem,” opposing, for example, classification problems with design problems (see, for example, Sowa, 1984). Indeed, some tasks, such as identifying bacteria from culture information, are inherently solved by simple classification. However, heuristic classification as defined here is a description of how a particular problem is solved by a particular problem solver. If the problem solver has a priori knowledge of solutions and can relate them to the problem description by data abstraction, heuristic association, and refinement, then the problem can be solved by classification. For example, if it were practical to enumerate all of the computer configurations R1 might select, or if the solutions were restricted to a predetermined, explicit set of designs, the program could be reconfigured to solve its problem by classification. The method of solving a configuration problem is not inherent in the task itself.
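The R1 point can be illustrated on a toy scale: when the space of designs is small enough to enumerate, a configuration problem becomes selection from a fixed set. This is a minimal Python sketch; the slots, options, and constraint are hypothetical and bear no relation to R1's actual knowledge. The enumeration grows as k**n for n slots of k options each, which is why this is impractical for realistic configuration tasks.

```python
from itertools import product

# Hypothetical component slots and options; not R1's actual knowledge.
OPTIONS = {
    "cpu": ["small", "large"],
    "memory": ["1MB", "4MB"],
    "disk": ["none", "80MB"],
}


def satisfies(config):
    """Hypothetical constraint: a large CPU requires 4MB of memory."""
    return not (config["cpu"] == "large" and config["memory"] != "4MB")


def all_configurations():
    """Every combination of options: k**n of them for n slots of k options each."""
    for combo in product(*OPTIONS.values()):
        yield dict(zip(OPTIONS, combo))


# Pre-enumerating the admissible designs turns them into a fixed solution set.
SOLUTIONS = [c for c in all_configurations() if satisfies(c)]


def select(requirements):
    """Classification-style selection from the pre-enumerated set."""
    return [s for s in SOLUTIONS if all(s[k] == v for k, v in requirements.items())]


print(len(SOLUTIONS))  # 6
print(select({"cpu": "large", "disk": "80MB"}))
# [{'cpu': 'large', 'memory': '4MB', 'disk': '80MB'}]
```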

With this distinction between problem and computational method in mind, we turn our attention to a systematic study of problem types. Can we form an explicit taxonomy that includes the kinds of applications we might typically encounter?

5.2. Background: Problem categories

One approach might be to focus on objects and what can be done to them. We can design them, diagnose them, use them in a plan to accomplish some function, etc. This seems like one way to consistently describe kinds of problems. Surely everything in the world involves objects.

However, in attempting to derive such a uniform framework, the concept of “object” becomes a bit elusive. For example, the analysis of problem types in Building Expert Systems (hereafter BES (Hayes-Roth, et al., 1983); see Table 5-1) indirectly refers to a program as an object. Isn’t it really a process? Are procedures objects or processes? It’s a matter of perspective. Projects and audit plans can be thought of as both objects and processes. Is a manufacturing assembly line an object or a process? The idea of a “system” appears to work better than the more common focus on objects and processes.

| Category | Problem addressed |
|---|---|
| INTERPRETATION | Inferring situation descriptions from sensor data |
| PREDICTION | Inferring likely consequences of given situation |
| DIAGNOSIS | Inferring system malfunctions from observables |
| DESIGN | Configuring objects under constraints |
| PLANNING | Designing actions |
| MONITORING | Comparing observations to plan vulnerabilities |
| DEBUGGING | Prescribing remedies for malfunctions |
| REPAIR | Executing a plan to administer a prescribed remedy |
| INSTRUCTION | Diagnosis, debugging, and repairing student behavior |
| CONTROL | Interpreting, predicting, repairing, and monitoring system behaviors |

Table 5-1: Generic categories of knowledge engineering applications. From (Hayes-Roth, et al., 1983), Table 1.1, page 14.

By organizing descriptions of problems around the concept of a system, we can improve upon the distinctions made in BES. As an example of the difficulties, consider that a situation description is a description of a system. Sensor data are observables. But what is the difference between INTERPRETATION (inferring system behavior from observables) and DIAGNOSIS (inferring system malfunctions from observables)? Diagnosis, so defined, includes interpretation. The list appears to deliberately have this progressive design behind it, as is particularly clear from the last two entries, which are composites of earlier “applications.” In fact, this idea of multiple “applications” to something (student behavior, system behavior) suggests that a simplification might be found by adopting more uniform terminology. As a second example, consider that the text of BES says that automatic programming is an example of a problem involving planning. How is that different from configuration under constraints (i.e., design)? Is automatic programming a planning problem or a design problem? We also talk about experiment design and experiment planning. Are the two words interchangeable?

We can get clarity by turning things around, thinking about systems and what can be done to and with them.

5.3. A system-oriented approach

We start by informally defining a system to be a complex of interacting objects that have some process (I/O) behavior. The following are examples of systems:

a stereo system

a VLSI chip

an organ system in the human body

a computer program

a molecule

a university

an experimental procedure

Webster’s defines a system to be “a set or arrangement of things so related or connected as to form a unity or organic whole.” The parts taken together have some structure. It is useful to think of the unity of the system in terms of how it behaves. Behavior might be characterized simply in terms of inputs and outputs.

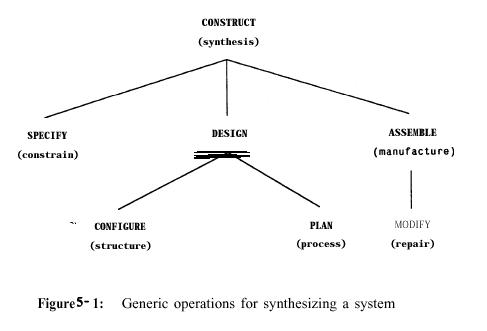

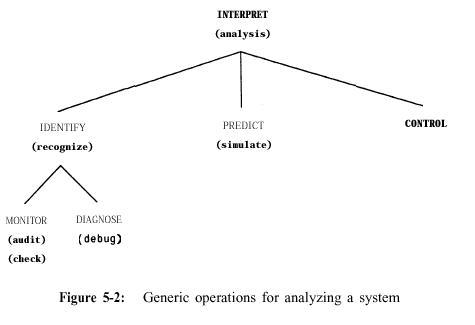

Figures 5-1 and 5-2 summarize hierarchically what we can do to or with a system, revising the BES table. We group operations in terms of those that construct a system and those that interpret a system, corresponding to what is generally called synthesis and analysis. Common synonyms appear in parentheses below the generic operations. In what follows, our new terms appear in upper case.

INTERPRET operations concern a working system in some environment. In particular, IDENTIFY is different from DESIGN in that it requires taking I/O behavior and mapping it onto a system. If the system has not been described before, then this is equivalent to (perhaps only partial) design from I/O pairs. PREDICT is the inverse, taking a known system and describing output behavior for given inputs. (“Simulate” is a specific method for making predictions, suggesting that there is a computational model of the system, complete at some level of detail.) CONTROL, not often associated with heuristic programs, takes a known system and determines inputs to generate prescribed outputs (Vemuri, 1978). Thus, these three operations, IDENTIFY, PREDICT, and CONTROL, logically cover the possibilities of problems in which one factor of the set {input, output, system} is unknown.
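The three-way split can be shown on the simplest possible system. This minimal Python sketch assumes a toy linear system, output = gain × input, where the “system” is just the gain; heuristic programs rarely have such a model, and the example only illustrates which of {input, output, system} each operation treats as unknown. Fitting the gain by least squares is an illustrative choice for IDENTIFY, not a method from the text.

```python
# Toy system: output = gain * input. The "system" is just the gain.

def predict(gain, x):
    """PREDICT: system and input known, output unknown."""
    return gain * x


def control(gain, y_target):
    """CONTROL: system and desired output known, input unknown."""
    return y_target / gain


def identify(io_pairs):
    """IDENTIFY: input/output behavior known, system unknown.
    Least-squares fit of the gain through the origin."""
    num = sum(x * y for x, y in io_pairs)
    den = sum(x * x for x, _ in io_pairs)
    return num / den


gain = identify([(1, 3), (2, 6), (4, 12)])
print(gain)                  # 3.0
print(predict(gain, 5))      # 15.0
print(control(gain, 9))      # 3.0
```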

Both MONITOR and DIAGNOSE presuppose a pre-existing system design against which the behavior of an actual, “running” system is compared. Thus, one identifies the system with respect to its deviation from a standard. In the case of MONITOR, one detects discrepancies in behavior (or simply characterizes the current state of the system). In the case of DIAGNOSE, one explains monitored behavior in terms of discrepancies between the actual (inferred) design and the standard system.
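MONITOR and DIAGNOSE can be separated in a small sketch: monitoring compares observed behavior with the behavior of the standard design, while diagnosis searches for a modified design that accounts for the observations, using prediction (simulation) to generate and test candidates. The two-stage system, its gains, and the candidate fault values below are hypothetical, chosen only to make the distinction concrete.

```python
# Standard (expected) design: a hypothetical two-stage amplifier chain.
STANDARD = {"stage1_gain": 2.0, "stage2_gain": 5.0}


def behavior(design, x):
    """Simulate the design: predicted output for input x."""
    return design["stage1_gain"] * design["stage2_gain"] * x


def monitor(observed_io, design=STANDARD, tol=1e-6):
    """MONITOR: report input/output pairs that deviate from the design's behavior."""
    return [(x, y) for x, y in observed_io if abs(y - behavior(design, x)) > tol]


def diagnose(observed_io, design=STANDARD, candidates=(0.0, 0.5, 1.0), tol=1e-6):
    """DIAGNOSE: explain discrepancies as one stage whose gain differs from the
    standard, by simulating each candidate variant (generate and test)."""
    explanations = []
    for part in design:
        for value in candidates:
            variant = dict(design, **{part: value})
            if not monitor(observed_io, variant, tol):
                explanations.append((part, value))
    return explanations


observed = [(1, 5.0), (2, 10.0)]
print(monitor(observed))   # [(1, 5.0), (2, 10.0)]  both deviate from 10x
print(diagnose(observed))  # [('stage1_gain', 1.0)]
```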

To carry the analysis further, we compare our proposed terms to those used in Building Expert Systems:

“Interpretation” is adopted as a generic category that broadly means to describe a working system. The most rudimentary form is simply identifying some unknown system from its behavior. Note that an identification strictly speaking involves a specification of constraints under which the system operates and a design (structure/process model). In practice, our understanding may not include a full design, let alone the constraints it must satisfy (consider the metarules of HERACLES (Section 6.1) versus our vague understanding of why they are reasonable). Examples of programs that identify systems are:

DENDRAL: System = molecular (structure) configuration (Buchanan, et al., 1969) (given spectrum behavior of the molecule).

PROSPECTOR: System = geological (formation) configuration (Hart, 1977) (given samples and geophysics behavior).

DEBUGGY: System = knowledge (program) configuration of student’s subtraction facts and procedure (Burton, 1982) (given behavior on a set of subtraction problems).

“Prediction” is adopted directly. Note that prediction, specifically simulation, may be an important technique underlying all of the other operations (e.g., using simulation to generate and test possible diagnoses).

“Diagnosis” is adopted directly as a kind of IDENTIFICATION, with some part of the design characterized as faulty with respect to a preferred model.

“Design” is taken to be the general operation that embraces both a characterization of structure (CONFIGURATION) and process (PLANNING).

“Monitoring” is adopted directly as a kind of IDENTIFICATION, with system behavior checked against a preferred, expected model.

“Debugging” is dropped, deemed to be equivalent to DIAGNOSIS plus MODIFY.

“Repair” is more broadly termed MODIFY; it could be characterized as transforming a system to effect a redesign, usually prompted by a diagnostic description. MODIFY operations are those that change the structure of the system, for example, editing a program or using drugs (or surgery) to change a living organism. Thus, MODIFY is a form of “reassembly” given a required design modification.

The idea of “executing a plan” is moved to the more general term ASSEMBLE, meaning the physical construction of a system. DESIGN is conceptual; it describes a system in terms of spatial and temporal interactions of components. ASSEMBLY is the problem of actually putting the system together in the real world. For example, contrast R1's problem with the problem of having a robot assemble the configuration that R1 designed. ASSEMBLY is equivalent to planning at a different level, that of a system that builds a designed subsystem.

“Instruction” is dropped because it is a composite operation that doesn’t apply to every system. In a strict sense, it is equivalent to MODIFY.

In addition to the operations already mentioned, we add SPECIFY, referring to the separable operation of constraining a system description, generally in terms of interactions with other systems and actual realization in the world (resources affecting components). Of course, in practice design difficulties may require modifying the specification, just as assembly may constrain design (commonly called “design for manufacturing”).
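The treatment of the BES terms above can be summarized as a small data structure: a parent link for each operation ("X is a kind of Y") and a table of BES terms that reduce to primitive operations. This is a minimal sketch. The top-level INTERPRET and CONSTRUCT groupings, and the placement of PREDICT and MODIFY under them, are our assumption, extrapolated from the construction/interpretation split used later in this section; the names `PARENT`, `BES_TERMS`, and `is_kind_of` are hypothetical.

```python
from typing import Dict, List, Optional

# Parent link for each operation ("X is a kind of PARENT[X]").
# The INTERPRET/CONSTRUCT roots and the placement of PREDICT and
# MODIFY are assumptions made for illustration.
PARENT: Dict[str, Optional[str]] = {
    "INTERPRET": None,
    "CONSTRUCT": None,
    "IDENTIFY": "INTERPRET",
    "MONITOR": "IDENTIFY",    # monitoring is a kind of identification
    "DIAGNOSE": "IDENTIFY",   # so is diagnosis
    "PREDICT": "INTERPRET",
    "SPECIFY": "CONSTRUCT",
    "DESIGN": "CONSTRUCT",
    "CONFIGURE": "DESIGN",    # design embraces configuration...
    "PLAN": "DESIGN",         # ...and planning
    "ASSEMBLE": "CONSTRUCT",
    "MODIFY": "CONSTRUCT",
}

# BES terms that are dropped or renamed, expressed as primitive operations.
BES_TERMS: Dict[str, List[str]] = {
    "debugging": ["DIAGNOSE", "MODIFY"],
    "repair": ["MODIFY"],
    "executing a plan": ["ASSEMBLE"],
    "instruction": ["MODIFY"],  # "in a strict sense"
}


def is_kind_of(op: str, ancestor: str) -> bool:
    """True if op is ancestor or is transitively a kind of it."""
    node: Optional[str] = op
    while node is not None:
        if node == ancestor:
            return True
        node = PARENT[node]
    return False


print(is_kind_of("MONITOR", "IDENTIFY"))  # True
print(is_kind_of("PLAN", "INTERPRET"))    # False
print(BES_TERMS["debugging"])             # ['DIAGNOSE', 'MODIFY']
```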

5.4. Configuration and planning

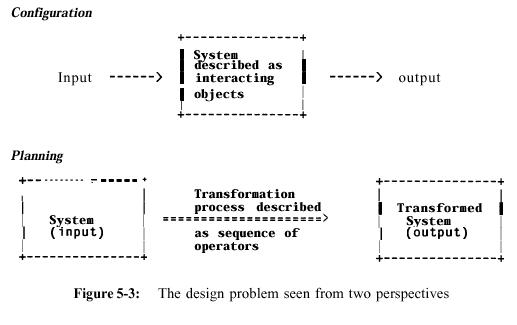

The distinction between configuration and planning requires some discussion. We will argue that they are two points of view for the single problem of designing a system. For example, consider how the problem of devising a vacation travel plan is equivalent to configuring a complex system consisting of travel, lodging, restaurant, and entertainment businesses and specifying how that system will service a particular person or group of people. “Configure” views the task as that of organizing objects into some system that as a functioning whole will process/transform another (internal) system (its input). “Plan,” as used here, turns this around, viewing the problem in terms of how an entity is transformed by its interactions with another (surrounding) system. Figure 5-3 illustrates these two points of view.

VLSI design is a paradigmatic example of the “configuration” point of view. The problem is to piece together physical objects so that their behaviors interact to produce the desired system behavior.

The “planning” point of view itself can be seen from two perspectives depending on whether a subsystem or a surrounding global system is being serviced:

While we appear to have laid out three perspectives on design, they are all computationally equivalent. It is our point of view about purpose and structuredness of interactions that makes it easier to understand a system in one way rather than another. In particular, in the first form of planning, the serviced subsystem becomes more organized as a result of its interactions, while the surrounding world is modified in generally entropy-increasing ways as its resources are depleted. In the second form of planning, the serviced world becomes more organized, while the servicing subsystem depletes its resources. Without considering the entropy change, there is just a single point of view: a surrounding system interacting with a contained subsystem.
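The equivalence of the "configure" and "plan" points of view can be illustrated with the vacation example from the start of this section. In this minimal sketch, under the simplifying assumption that the configuration is a linear chain of components (real configurations are graphs, and servicing may be opportunistic), the configuration is just a list of components, and the plan is derived from it by following the serviced traveler's successive states. The component names, the destination, and `plan_view` are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, str]
# Hypothetical component: a named business that transforms the traveler.
Component = Tuple[str, Callable[[State], State]]


def fly(traveler: State) -> State:
    return {**traveler, "location": "Paris"}


def check_in(traveler: State) -> State:
    return {**traveler, "lodging": "hotel"}


def dine(traveler: State) -> State:
    return {**traveler, "fed": "yes"}


# "Configure" view: the system is an arrangement of connected components.
trip: List[Component] = [("airline", fly), ("hotel", check_in),
                         ("restaurant", dine)]


def plan_view(config: List[Component],
              traveler: State) -> List[Tuple[str, State]]:
    """'Plan' view of the same system: the serviced entity's successive
    states as each component in the configuration acts on it."""
    trace: List[Tuple[str, State]] = []
    for name, service in config:
        traveler = service(traveler)
        trace.append((name, traveler))
    return trace


for stop, state in plan_view(trip, {"location": "home"}):
    print(stop, state)
```

Nothing new is computed in the plan view; it is the same system read from the standpoint of the entity being serviced rather than the arrangement of servicing parts.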

“Configuration” is concerned with the construction of well-structured systems. In particular, if subsystems correspond to physically independent components, design is equivalent to organizing pieces so they spatially fit together, with flow from input to output ports producing the desired result. (Note that R1 is given some of the pieces, not the functional properties of the computer system it is configuring. The functional design is implicit in the roster of pieces it is asked to configure: the customer’s order.) It is a property of any system that can be described in this way that it is hierarchically decomposable into modular, locally interacting subsystems, which is the definition of a well-structured system. As Simon (1969) points out, it is sufficient for design tractability for systems to be “nearly decomposable,” with weak but non-negligible interactions among modules.
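Near decomposability can be made operational in many ways. The sketch below uses one simple, hypothetical test that is not Simon's own formulation: given a matrix of pairwise interaction strengths and a proposed assignment of components to modules, it accepts the partition when every cross-module interaction is at most a chosen fraction (`ratio`, an assumed parameter) of the weakest nonzero within-module interaction.

```python
from typing import Sequence


def nearly_decomposable(strength: Sequence[Sequence[float]],
                        module_of: Sequence[int],
                        ratio: float = 0.1) -> bool:
    """Hypothetical criterion: cross-module interactions are weak relative
    to the weakest nonzero interaction inside any module."""
    n = len(module_of)
    pairs = [(i, j) for i in range(n) for j in range(n) if i != j]
    within = [abs(strength[i][j]) for i, j in pairs
              if module_of[i] == module_of[j] and strength[i][j] != 0]
    between = [abs(strength[i][j]) for i, j in pairs
               if module_of[i] != module_of[j]]
    strongest_between = max(between, default=0.0)
    if not within:
        # No internal structure: only a fully disconnected system qualifies.
        return strongest_between == 0.0
    return strongest_between <= ratio * min(within)


# Four components: 0-1 and 2-3 interact strongly, the two pairs only weakly.
S = [
    [0.0, 5.0, 0.2, 0.0],
    [5.0, 0.0, 0.0, 0.1],
    [0.2, 0.0, 0.0, 4.0],
    [0.0, 0.1, 4.0, 0.0],
]
print(nearly_decomposable(S, [0, 0, 1, 1]))  # True: weak but non-zero links
print(nearly_decomposable(S, [0, 1, 0, 1]))  # False: a poor choice of modules
```

The same system passes or fails depending on how it is partitioned, which matches the point that tractability depends on finding the modular description, not just on the system itself.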

Now, to merge this with the conception of “planning,” consider how an abstract process can be visualized graphically in terms of a diagram of connected operations. The recent widespread availability of computer graphics has revolutionized how we visualize systems (processes and computations). Examples of traditional and more recent attempts to visualize the structure of processes are:

Flowcharts. A program is a system. It is defined in terms of a sequence of operations for transforming a subsystem, the data structures of the program. Subprocedures and sequences of statements are subsystems that are structurally blocked and connected.

Automata theory. Transition diagrams are one way of describing finite state machines. Petri nets and dataflow graphs are other, related, notations for describing computational processes (see (Sowa, 1984) for discussion).

Actors. A system can be viewed in terms of interacting, independent agents that pass messages to one another. Emphasis is placed on rigorous, local specification of behaviors (Hewitt, 1979). Object-oriented programming (Goldberg and Robson, 1983) is in general an attempt to characterize systems in terms of a configuration, centering descriptions on objects that are pieced together, as opposed to centering on data transformations.

Thinglab. Borning (1979) emphasized the use of multiple graphic views for depicting a dynamic simulation of mutually constrained components of a system. Borning mentions the advantages of visual experimentation for understanding complex system interactions.

Rocky’s Boots. In this personal computer game, icons are configured to define a program, such as a sorting routine that operates on a conveyor belt. Movement icons permit automata to move around and interact with each other, thus describing “planning” (how systems will interact) from a “configuration” (combination of primitive structures) point of view.

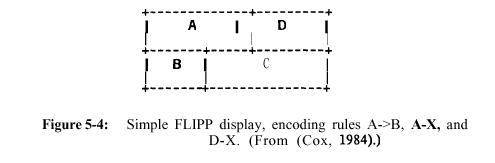

FLIPP Displays. Decision rules can be displayed in analog form as connected boxes that are interpreted by top-down traversal (see Figure 5-4). Subproblems can be visually “chunked”; logical reasoning can be visualized in terms of adjacency, blocking, alternative routes, etc. Characteristic of analog representations, such displays are economical, facilitating encoding and perception of interactions (Mackinlay and Genesereth, 1984).

Streams. The structure of procedures can be made clearer by describing them in terms of the signals that flow from one stage in a process to another (Abelson, et al., 1985). Instead of modeling procedures in terms of time-varying objects (variables, see “planning” in Figure 5-3), we can describe procedures in terms of time-invariant streams. For example, a program might be characterized as ENUMERATE + FILTER + MAP + ACCUMULATE, a configuration of connected subprocesses. Stream descriptions, inspired by signal processing diagrams, allow a programmer to visualize processes in a succinct way that reveals the structural similarity of programs.
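The ENUMERATE + FILTER + MAP + ACCUMULATE description can be illustrated with two small programs in the spirit of the signal-flow examples of Abelson et al. (1985). In this sketch, Python's lazy iterators stand in for streams, and the programs are our own adaptations (for instance, enumerating an integer interval rather than the leaves of a tree). Written this way, the two programs visibly share one configuration of stages, differing only in which stages are plugged in and in what order.

```python
from functools import reduce
from typing import Iterable, List


def enumerate_interval(lo: int, hi: int) -> Iterable[int]:
    """ENUMERATE: the signal source, lo..hi inclusive (lazy)."""
    return range(lo, hi + 1)


def fib(n: int) -> int:
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


def sum_odd_squares(n: int) -> int:
    # ENUMERATE -> FILTER(odd) -> MAP(square) -> ACCUMULATE(+, 0)
    odds = filter(lambda k: k % 2 == 1, enumerate_interval(1, n))
    squares = map(lambda k: k * k, odds)
    return reduce(lambda acc, k: acc + k, squares, 0)


def even_fibs(n: int) -> List[int]:
    # ENUMERATE -> MAP(fib) -> FILTER(even) -> ACCUMULATE(append, [])
    fibs = map(fib, enumerate_interval(0, n))
    evens = filter(lambda k: k % 2 == 0, fibs)
    return reduce(lambda acc, k: acc + [k], evens, [])


print(sum_odd_squares(5))  # 35
print(even_fibs(10))       # [0, 2, 8, 34]
```

No variable is updated over time at the level of the program's structure; each procedure is described entirely by which signal-processing stages are connected, which is the "configuration of the process" view discussed below.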

These examples suggest that we have not routinely viewed “planning” problems in terms of system “configuration” because we have not had adequate notations for visualizing interactions. In particular, we have lacked tools for graphically displaying hierarchical interactions and movement of subsystems through a containing system. Certainly a large part of the problem is that interactions can be opportunistic, so the control strategy that affects servicing (in either form of planning) is not specifiable as a fixed sequence of interactions. The inability to graphically illustrate flexible strategies was one limitation of the original Actors formalism (Hewitt, 1979). On the other hand, control strategies themselves may be specifiable as a hierarchy of processes, even though they are complex and allow for opportunism. The representation of procedures in HERACLES (Section 6.1) as layered rule sets corresponding to tasks, with data-directed reasoning encoded as a separate set of tasks and with inherited “interrupt” conditions, is an example of a well-structured encoding of an opportunistic strategy. More generally, strategy might be graphically visualized as layers of object-level operations and agenda-processing operations.
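The idea of a well-structured encoding of an opportunistic strategy can be sketched in a few lines. This is a hypothetical toy, not HERACLES itself (whose tasks are encoded as metarules): each task is an ordered rule set, a rule's action may invoke a subtask, and interrupt conditions declared on a task are inherited by its subtasks, so a data-directed task can seize control in the middle of a subtask. All task names and findings are invented for illustration, and interrupts are assumed to be checked only before each rule.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Blackboard = Dict[str, object]
Condition = Callable[[Blackboard], bool]


@dataclass
class Task:
    """An ordered rule set. A rule's action may return a subtask.
    Interrupts declared on a task are inherited by all of its subtasks."""
    name: str
    rules: List[Tuple[Condition, Callable[[Blackboard], Optional["Task"]]]]
    interrupts: List[Tuple[Condition, "Task"]] = field(default_factory=list)


def run(task: Task, bb: Blackboard,
        inherited: Sequence[Tuple[Condition, Task]] = (),
        trace: Optional[List[str]] = None) -> List[str]:
    trace = [] if trace is None else trace
    trace.append(task.name)
    active = list(inherited) + task.interrupts
    for condition, action in task.rules:
        # Opportunism: before each step, an inherited interrupt may fire and
        # hand control to a data-directed task (expected to clear its trigger).
        for trigger, handler in active:
            if trigger(bb):
                run(handler, bb, (), trace)
        if condition(bb):
            subtask = action(bb)
            if subtask is not None:
                run(subtask, bb, active, trace)
    return trace


def always(bb: Blackboard) -> bool:
    return True


def ask_fever(bb: Blackboard) -> None:
    bb["fever"] = True


def ask_travel(bb: Blackboard) -> None:
    bb["travel"] = True


def note_red_flag(bb: Blackboard) -> None:
    bb["red_flag_handled"] = True


def red_flag(bb: Blackboard) -> bool:
    return bool(bb.get("fever")) and not bb.get("red_flag_handled")


pursue = Task("pursue-red-flag", [(always, note_red_flag)])
gather = Task("gather-history", [(always, ask_fever), (always, ask_travel)])
consult = Task("consult", [(always, lambda bb: gather)],
               interrupts=[(red_flag, pursue)])

print(run(consult, {}))  # ['consult', 'gather-history', 'pursue-red-flag']
```

The interrupt is declared on `consult` but fires inside `gather-history`, between two of its rules; the control structure stays a fixed hierarchy of tasks even though the order of events is data-driven.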

In general, a configuration point of view is impossible when physical or planning structures are unstable, with many global interactions (Hewitt, 1979). It is difficult or impossible to plan in such a world; this suggests that most practical planning problems can be characterized in terms of configuration. It is interesting to note that replacing state descriptions (configurations) with process descriptions has played an important role in scientific understanding of the origins of systems (Simon, 1969). As illustrated by the examples of this section, to understand these processes, we return to a configuration description, but now at the level of the structure of the process instead of the system it constructs or interprets.

5.5. Combinations of system problems

Given the above categorization of construction and interpretation problems, it is striking that expert systems tend to solve a sequence of problems pertaining to a given system in the world. Two sequences that commonly occur are:

The Construction Cycle: SPECIFY + DESIGN {+ ASSEMBLE}

An example is R1 with its order-processing front-end, XSEL. Broadly speaking, selecting a book for someone in GRUNDY is single-step planning; the person is “serviced” by the book. Other examples are selecting a wine or a class to attend. The terms commonly paired in business, “plan and schedule,” are here named SPECIFY (objectives) and PLAN (activities).

The Maintenance Cycle: {MONITOR + PREDICT +} DIAGNOSE + MODIFY

This is the familiar pattern of medical programs, such as MYCIN. The sequence of MONITOR and PREDICT is commonly called test (repeatedly observing system behavior on input selected to verify output predictions). MODIFY is also called therapy.

This brings us back to the BES table (Figure 5-1), which characterizes INSTRUCTION and CONTROL as a sequence of primitive system operations. We can characterize the expert systems we have studied as such sequences of operations:

MYCIN = MONITOR (patient state) + DIAGNOSE (disease category) + IDENTIFY (bacteria) + MODIFY (body system or organism).

GRUNDY = IDENTIFY (person type) + PLAN (reading plan)

SACON = IDENTIFY (structure type) + PREDICT (approximate numeric model) + IDENTIFY (classes of analysis for refined prediction)

SOPHIE = MONITOR (circuit state) + DIAGNOSE (faulty module/component)
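Read as compositions, these equations suggest a simple sketch: each operation maps a description of the problem to an enriched description, and a sequence of operations is their left-to-right composition, so one step's solution becomes the next step's data. The GRUNDY-like rules below (the stereotype, the genre table, and the age threshold) are hypothetical and only illustrate the shape of IDENTIFY (person type) + PLAN (reading plan); `sequence` is likewise an invented helper.

```python
from typing import Any, Callable, Dict

Description = Dict[str, Any]
Operation = Callable[[Description], Description]


def identify_person(data: Description) -> Description:
    """IDENTIFY (person type): data abstraction plus heuristic association."""
    age_band = "teen" if data["age"] < 20 else "adult"    # data abstraction
    stereotype = ("sci-fi fan" if "space" in data["interests"]
                  else "general reader")                   # heuristic match
    return {**data, "age_band": age_band, "stereotype": stereotype}


def plan_reading(data: Description) -> Description:
    """PLAN (reading plan): the stereotype found above is now input data."""
    genre = {"sci-fi fan": "science fiction"}.get(data["stereotype"],
                                                  "general fiction")
    level = "young adult" if data["age_band"] == "teen" else "adult"
    return {**data, "reading_plan": f"{level} {genre}"}   # refinement


def sequence(*operations: Operation) -> Operation:
    """Compose operations left to right, e.g. IDENTIFY + PLAN."""
    def run(data: Description) -> Description:
        for op in operations:
            data = op(data)
        return data
    return run


grundy = sequence(identify_person, plan_reading)
result = grundy({"age": 16, "interests": ["space", "chess"]})
print(result["reading_plan"])  # young adult science fiction
```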

When a problem solver uses heuristic classification for multiple steps, as in GRUNDY, we say that the problem-solving method is sequential heuristic classification. Solutions for a given classification (e.g., stereotypes of people) become data for the next classification step. Note that MYCIN does not strictly do sequential classification because it does not have a well-developed classification of patient types, though this is a reasonable model of how human physicians reason. However, it seems fair to say that MYCIN does perform a monitoring operation in that it requests specific information about a patient to characterize his state; this is clearer in NEOMYCIN and CASNET, where there are many explicit, intermediate patient state descriptions. On the other hand, SOPHIE provides a better example of monitoring because it interprets global behavior, attempting to detect faulty